What if a surgical robot could watch itself move, and correct course in real time, without any external cameras or bulky sensors?

A team of researchers has made that idea a reality by giving a miniature, origami-inspired surgical robot its own built-in camera and vision system. The result is a compact tool that can guide its own motion with micron-level accuracy, designed for delicate procedures inside the body.

A Self-Watching Robot for Microsurgery

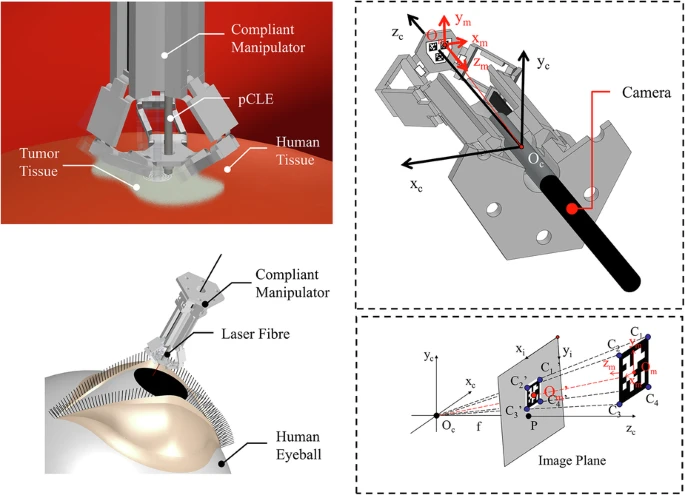

In a new study published in Microsystems & Nanoengineering, researchers from Imperial College London and the University of Glasgow introduced a robotic system that combines piezoelectric actuation with internal visual feedback for micromotion control. The design is especially suited for catheter-based diagnosis, laser resection, and probe-based endomicroscopy, where space is tight and precision is critical.

Lead author Xu Chen and colleagues described the robot as the first to use fully onboard visual servoing. Rather than relying on external motion-tracking cameras or complex force sensors, this microrobot houses a miniaturized endoscope camera underneath its platform. It watches the position of printed AprilTag markers—square visual codes—and adjusts its motion accordingly using a feedback loop.

How It Works

The robot is based on a delta configuration, known for its planar stability and fast, precise movements. Its flexible structure is 3D-printed as a flat sheet and then folded, origami-style, into three arms driven by piezoelectric beams. These beams bend when a voltage is applied, letting the platform move with three degrees of freedom: translation along the x, y, and z axes.

The real magic lies in how the system watches itself. A 3.9 mm borescope camera looks up at the AprilTags as the robot moves. A control algorithm compares the tags’ expected and observed positions and sends correction signals to the actuators. This closed-loop system significantly improves precision and stability, even when external forces like gravity act on the robot.
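To make the feedback loop concrete, here is a minimal Python sketch of proportional visual servoing. It assumes a linearized stage model in which platform displacement equals a fixed matrix `J_TRUE` times the three drive signals, and it simulates the camera with a function `observe_position` that adds a constant offset standing in for gravity-induced sag. Both names, the matrix values, the gain, and the frame count are hypothetical, and the controller is a generic textbook scheme, not the authors' published algorithm.

```python
import numpy as np

# Hypothetical linearized model of a three-arm delta stage: platform
# displacement (x, y, z in µm) ~= J @ u, where u holds the three piezo
# drive signals. The numbers are illustrative, not from the study.
J_TRUE = np.array([
    [1.0, -0.5, -0.5],     # x: arms spaced 120 degrees apart
    [0.0, 0.87, -0.87],    # y
    [0.3, 0.3, 0.3],       # z: all arms acting together
])


def observe_position(u, disturbance):
    """Stand-in for the onboard camera reading the AprilTags: returns the
    platform position (µm) for drive vector u, plus an unmodelled offset
    such as gravity-induced sag."""
    return J_TRUE @ u + disturbance


def visual_servo(target, J_model, disturbance, gain=0.5, frames=50):
    """Proportional visual servoing: once per simulated camera frame, compare
    the expected (target) and observed positions and update the drive
    signals through the inverse of the *model* Jacobian to shrink the error."""
    J_inv = np.linalg.inv(J_model)
    u = J_inv @ target                      # open-loop first guess
    for _ in range(frames):
        error = target - observe_position(u, disturbance)
        u = u + gain * (J_inv @ error)
    return observe_position(u, disturbance)


target = np.array([10.0, 0.0, 0.0])         # desired position, µm
sag = np.array([0.0, 0.0, -5.0])            # illustrative load-induced offset, µm

open_loop = observe_position(np.linalg.inv(J_TRUE) @ target, sag)
closed_loop = visual_servo(target, 1.2 * J_TRUE, sag)   # model deliberately 20% off
print("open loop:  ", np.round(open_loop, 3))
print("closed loop:", np.round(closed_loop, 3))
```

In this toy simulation the open-loop command, even with a perfect model, ends 5 µm low in z, while the closed loop, even with a model that is 20% off, shrinks the error by a constant factor each frame until the observed position matches the target. That is the basic reason feedback from the tags can compensate for both modelling errors and external loads.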

Key capabilities demonstrated:

- Motion accuracy of 7.5 μm

- Motion precision of 8.1 μm

- Trajectory resolution of 10 μm

- Stable performance under added loads and gravitational shifts
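The article does not say how accuracy and precision were computed. One common convention, assumed here rather than taken from the paper, treats accuracy as the distance between the average measured position and the commanded target, and precision as the spread of repeated measurements around their own average. A minimal sketch, with a hypothetical function name and toy readings:

```python
import numpy as np


def accuracy_and_precision(target, measured):
    """Assumed convention (not necessarily the paper's):
    accuracy  = distance from the mean measured point to the target;
    precision = root-mean-square distance of the points from their mean."""
    measured = np.asarray(measured, dtype=float)
    mean_pos = measured.mean(axis=0)
    accuracy = float(np.linalg.norm(mean_pos - np.asarray(target, dtype=float)))
    precision = float(np.sqrt(np.mean(np.sum((measured - mean_pos) ** 2, axis=1))))
    return accuracy, precision


# Toy readings (µm), not data from the study.
# Case 1: tightly clustered but offset from the target.
acc, prec = accuracy_and_precision([0.0, 0.0], [[3.0, 4.0], [3.0, 4.0]])
print(acc, prec)    # 5.0 0.0

# Case 2: centred on the target but scattered.
acc2, prec2 = accuracy_and_precision([0.0, 0.0], [[1.0, 0.0], [-1.0, 0.0]])
print(acc2, prec2)  # 0.0 1.0
```

The two toy cases show why the metrics are reported separately: a robot can be repeatable yet offset, or centred on its target yet scattered.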

Why This Matters

Traditional feedback systems for microrobots tend to be bulky, wired, or dependent on sensors placed in the surrounding environment. Such setups are impractical for real-world surgery, especially inside the human body. By building the camera into the robot and closing the feedback loop onboard, the new design addresses several of these problems at once.

“Our approach allows a surgical microrobot to track and adjust its own motion without relying on external infrastructure,” said Xu Chen. “By integrating vision directly into the robot, we achieve higher reliability, portability, and precision—critical traits for real-world medical applications.”

Internal visual feedback also reduces the system's vulnerability to the electrical and magnetic interference that can plague conventional strain or force sensors. The design keeps the robot's tip electronically passive, which suits sterile or electromagnetically noisy environments.

Real-World Potential and Future Directions

The team tested the robot in several experiments, including tracing circular paths, correcting gravity-induced drift, and performing probe-based confocal laser endomicroscopy (pCLE) tissue scans. In each case, the robot's internal camera and control algorithm kept it on target. Even with a 200 mg weight added to simulate gravitational loading, the onboard system restored the correct path to within microns.

The current design's feedback speed is limited by its 30 frames-per-second camera, but future versions could use faster imaging hardware. The authors suggest the OmniVision OH08A endoscope camera as a candidate for increasing speed and resolution, especially along the z-axis.
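A quick calculation shows why the frame rate caps the loop. Assuming the controller issues one correction per image, the update period is the reciprocal of the frame rate:

$$
T_{\text{frame}} = \frac{1}{f_{\text{cam}}} = \frac{1}{30\ \text{s}^{-1}} \approx 33.3\ \text{ms}
$$

So a disturbance that arises just after a frame is captured is not seen until the next frame, roughly 33 ms later, before any image-processing time is added. The actual loop timing in the study may include further delays.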

Because the robot’s structure is assembled using 3D printing and folding, it’s also scalable. The team estimates they can shrink the device by a factor of three using off-the-shelf components. With further miniaturization and higher-speed feedback, the system could be integrated into catheters, neurosurgical tools, or autonomous biopsy systems.

For now, this robot represents a new frontier: a self-seeing surgical assistant that doesn’t need a room full of external cameras to do its job right.

Journal and Citation

Journal: Microsystems & Nanoengineering

DOI: 10.1038/s41378-025-00955-x

Published: May 29, 2025

Authors: Xu Chen, Michail E. Kiziroglou, Eric M. Yeatman
