Back in mid-September, a pair of Massachusetts lawmakers introduced a bill “to ensure the responsible use of advanced robotic technologies.” In the simplest and most direct terms, that means legislation that would bar the manufacture, sale and use of weaponized robots.

It’s an interesting proposal for a number of reasons. The first is the general lack of U.S. state and national laws addressing what is a growing concern. It’s one of those things that has felt so much like science fiction that many lawmakers had no interest in pursuing it in any pragmatic way.

Of course, it isn’t just science fiction and hasn’t been for a long time. To put things bluntly, the United States has been using robots (drones) to kill people for more than 20 years. But as crass as this might sound, people tend to view these technologies very differently when it comes to their own backyard.

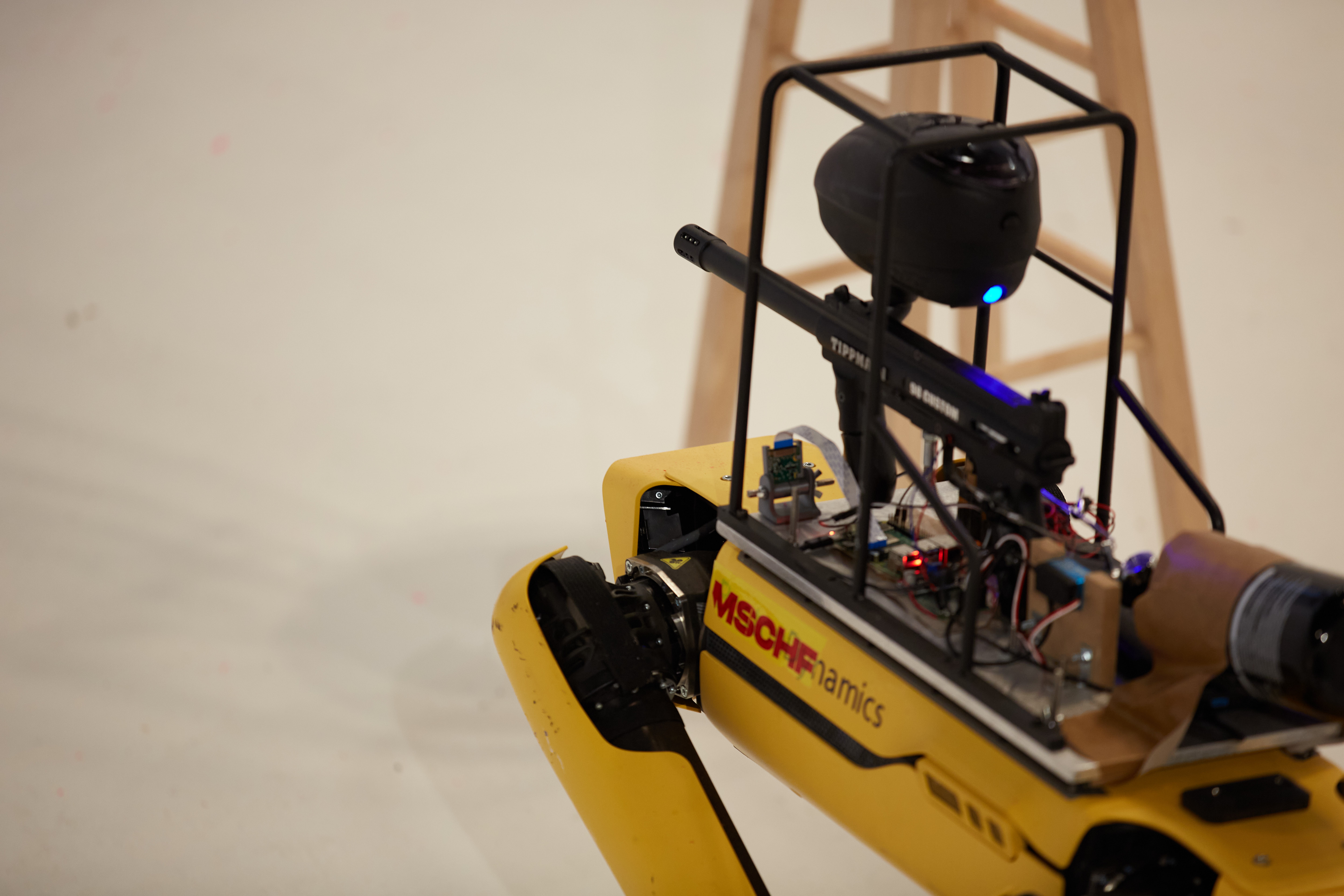

The concern about “killer robots” is, however, far broader than military applications. Some of it is, indeed, still based on your typical Terminators; I, Robots; and Five Nights at Freddy’s. Other concerns are far more grounded. Remember when MSCHF mounted a paintball gun on a Spot to make a point? How about all of the images of Ghost Robotics quadrupeds with sniper rifles?

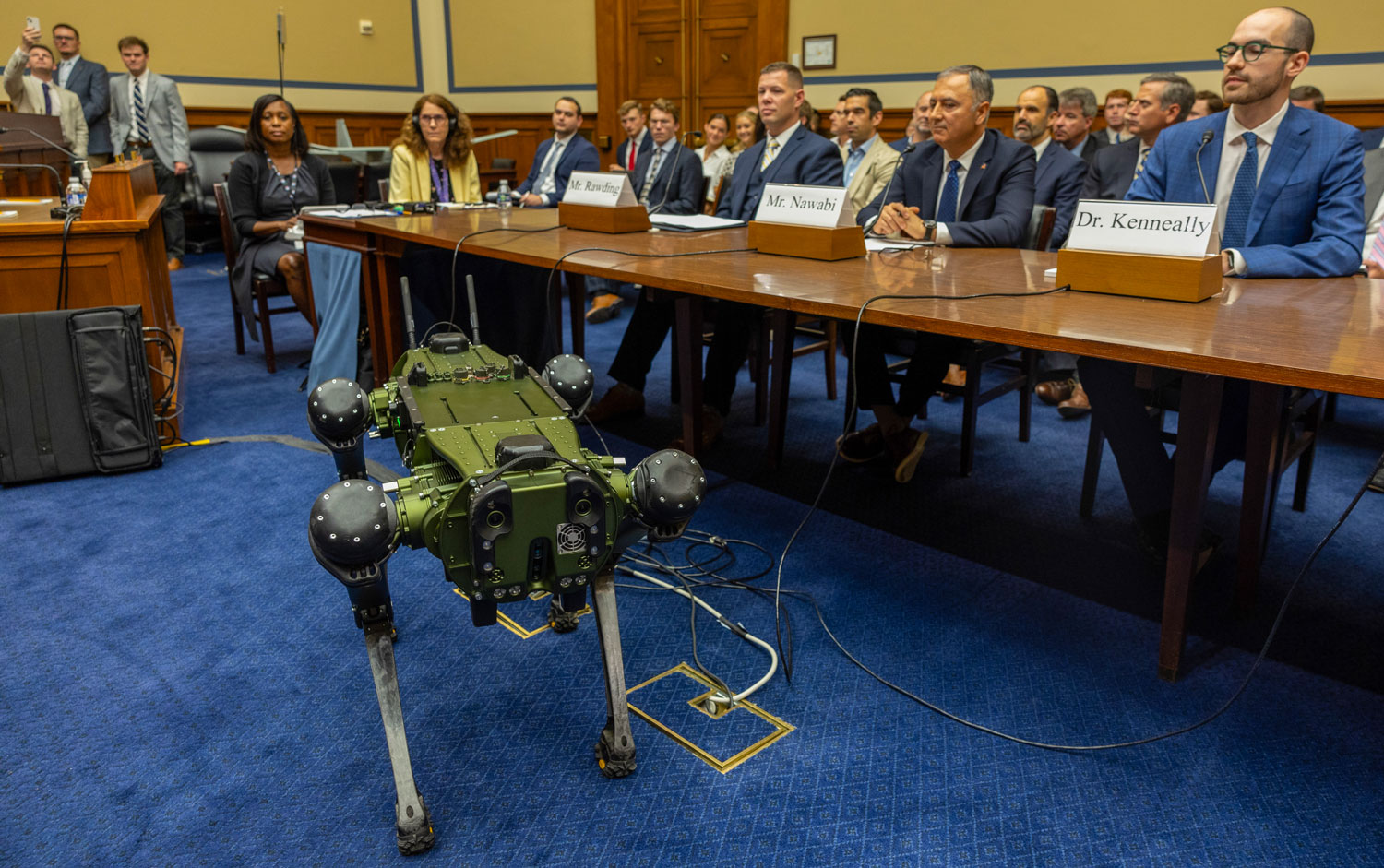

WASHINGTON, DC – JUNE 22: Gavin Kenneally, Chief Executive Officer at Ghost Robotics, speaks as a Vision 60 UGV walks in during a House hearing at the US Capitol on June 22, 2023 in Washington, DC. The House Committee on Oversight and Accountability Subcommittee on Cybersecurity, Information Technology, and Government Innovation met to discuss the use of technology at the US border, airports and military bases. (Photo by Tasos Katopodis/Getty Images)

While still not an everyday occurrence, there’s also a precedent for cops using robots to kill. The week of Independence Day 2016, the Dallas Police Department killed a suspect by mounting a bomb on a bomb disposal robot. Whatever you think about the wisdom and ethics of such a move, you can’t credibly argue that the robot was doing the job it was built for. Quite the opposite, in fact.

More recently, the potential use of weaponized robots by law enforcement has been a political lightning rod in places like Oakland and San Francisco. Last October, Boston Dynamics joined forces with Agility, ANYbotics, Clearpath Robotics and Open Robotics in signing an open letter condemning the weaponization of “general purpose” robots.

It read, in part:

> We believe that adding weapons to robots that are remotely or autonomously operated, widely available to the public, and capable of navigating to previously inaccessible locations where people live and work, raises new risks of harm and serious ethical issues. Weaponized applications of these newly-capable robots will also harm public trust in the technology in ways that damage the tremendous benefits they will bring to society.

With that in mind, it shouldn’t come as a big surprise that Spot’s maker played a key role in planting the seed for this new proposed legislation. Earlier this week, I spoke about the bill with Massachusetts state representative Lindsay Sabadosa, who filed it alongside Massachusetts state senator Michael Moore.

MA Rep. Sabadosa

What is the status of the bill?

We’re in an interesting position, because there are a lot of moving parts with the bill. The bill has had a hearing already, which is wonderful news. We’re working with the committee on the language of the bill. They have had some questions about why different pieces were written as they were written. We’re doing that technical review of the language now — and also checking in with all stakeholders to make sure that everyone who needs to be at the table is at the table.

When you say “stakeholders” . . .

Stakeholders are companies that produce robotics. The robot Spot, which Boston Dynamics produces, and other robots as well, are used by entities like Boston Police Department or the Massachusetts State Police. They might be used by the fire department. So, we’re talking to those people to run through the bill, talk about what the changes are. For the most part, what we’re hearing is that the bill doesn’t really change a lot for those stakeholders. Really the bill is to prevent regular people from trying to weaponize robots, not to prevent the very good uses that the robots are currently employed for.

Does the bill apply to law enforcement as well?

We’re not trying to stop law enforcement from using the robots. And what we’ve heard from law enforcement repeatedly is that they’re often used to de-escalate situations. They talk a lot about barricade situations or hostage situations. Not to be gruesome, but if people are still alive, if there are injuries, they say it often helps to de-escalate, rather than sending in officers, which we know can often escalate the situation. So, no, we wouldn’t change any of those uses. The legislation does ask that law enforcement get warrants for the use of robots if they’re using them in situations where they would otherwise send in a police officer. That’s pretty common already. Law enforcement has to do that if it’s not an emergency situation. We’re really just saying, “Please follow current protocol. And if you’re going to use a robot instead of a human, let’s make sure that protocol is still the standard.”

I’m sure you’ve been following the stories out of places like San Francisco and Oakland, where there have been attempts to weaponize police robots. Is that covered by this bill?

We haven’t had law enforcement weaponize robots, and no one from law enforcement in Massachusetts has said, “We’d like to attach a gun to a robot.” I think because of some of those past conversations there’s been a desire to not go down that route. And I think that local communities would probably have a lot to say if the police started to do that. So, while the legislation doesn’t outright ban that, we are not condoning it either.

Image Credits: MSCHF

There’s no attempt to get out ahead of it in the bill?

Not in the legislation. People using the [robot] dogs to hunt by attaching guns to them, and things like that: that’s not something we want to see.

Is there any opposition currently?

We haven’t had any opposition to the legislation. We certainly had questions from stakeholders, but everything has been relatively positive. We’ve found most people — even with suggested tweaks to the legislation — feel like there’s common ground that we can come to.

What sorts of questions are you getting from the stakeholders?

Well, the first question we always get is, “Why is this important?”

You’d think that would be something the stakeholders would understand.

But a lot of times, [companies ask] what is the intent behind it? Is it because we’re trying to do something that isn’t obvious, or are we really just trying to make sure that there’s not misuse? I think Boston Dynamics is trying to say, “We want to get ahead of potential misuse of our robots before something happens.” I think that’s smart.

There hasn’t been pushback around questions of stifling innovation?

I don’t think so. In fact, I think the robotics trade association is on board. And then, of course, Boston Dynamics is really leading the charge on this. We’ve gotten thank-you notes from companies, but we haven’t gotten any pushback from them. And our goal is not to stifle innovation. I think there are lots of wonderful things that robots will be used for. I appreciate how they can be used in situations that would be very unsafe for humans. But I don’t think attaching guns to robots is really an area of innovation that is being explored by many companies.

An aerial general view during a game between the Boston Red Sox and the New York Yankees on August 13, 2022 at Fenway Park in Boston, Massachusetts. (Photo by Billie Weiss/Boston Red Sox/Getty Images)

Massachusetts is a progressive state, but it’s interesting that it’s one of the first to go after a bill like this, since Boston is one of the world’s top robotics hubs.

That’s why we wanted to be the first to do it. I’m hopeful that we will be the first to get the legislation across the finish line, too. You asked if it was stifling innovation. I’ve argued that this bill helps, because it gives companies this modicum of safety to say, “We’re not producing these products for nefarious purposes. This innovation is really good.” I’ve heard people say that we need to be careful, that roboticists are just trying to create robocops. That’s not what these companies are doing. They’re trying to create robots for very specific situations that can be very useful and help save human lives. So I think that’s worthy. We view this as supporting the robotics industry, rather than trying to hamper it.

Were those stories out of places like San Francisco and Oakland an inspiration behind the bill’s creation?

Honestly, I think they were for Boston Dynamics. They sought us out.

So, Boston Dynamics spurred the initial conversation?

Yes, which is, from my perspective, why this is a bill that is helping, rather than hindering.

A version of this piece first appeared in TechCrunch’s robotics newsletter, Actuator.