Three years ago one of us, Toni, asked another of us, Marco, to come to his office at the Institute of Photonic Sciences, a large research center in Castelldefels near Barcelona. “There is a problem that I wanted to discuss with you,” Toni began. “It is a problem that Miguel and I have been trying to solve for years.” Marco made a curious face, so Toni posed the question: “Can standard quantum theory work without imaginary numbers?”

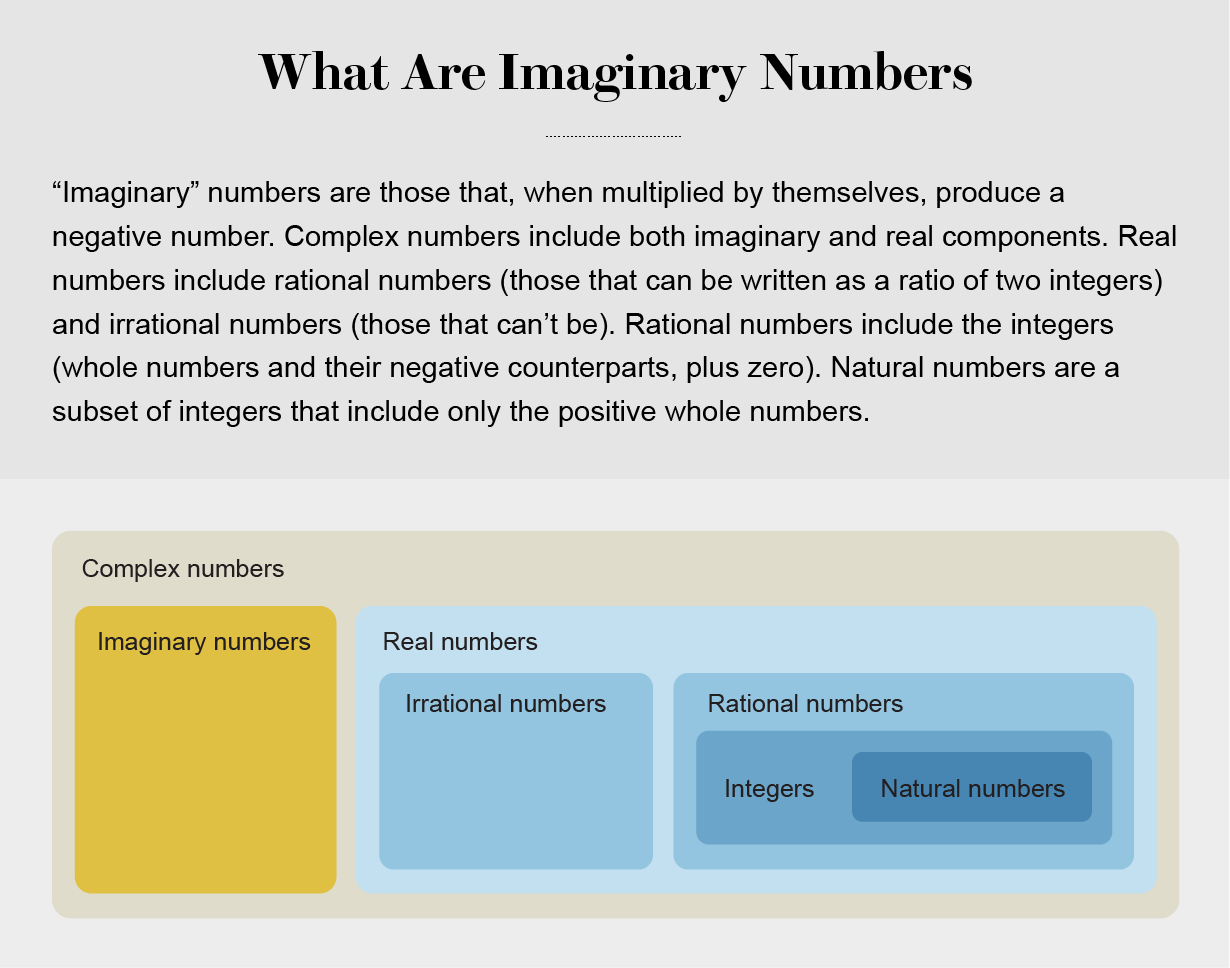

Imaginary numbers, when multiplied by themselves, produce a negative number. They were first named “imaginary” by philosopher René Descartes, to distinguish them from the numbers he knew and accepted (now called the real numbers), which did not have this property. Later, complex numbers, which are the sum of a real and an imaginary number, gained wide acceptance by mathematicians because of their usefulness for solving complicated mathematical problems. They aren’t part of the equations of any fundamental theory of physics, however—except for quantum mechanics.

The most common version of quantum theory relies on complex numbers. When we restrict the numbers appearing in the theory to the real numbers, we arrive at a new physical theory: real quantum theory. In the first decade of the 21st century, several teams showed that this “real” version of quantum theory could be used to correctly model the outcomes of a large class of quantum experiments. These findings led many scientists to believe that real quantum theory could model any quantum experiment. Choosing to work with complex instead of real numbers didn’t represent a physical stance, scientists thought; it was just a matter of mathematical convenience.

Still, that conjecture was unproven. Could it be false? After that conversation in Toni’s office, we started on a months-long journey to refute real quantum theory. We eventually came up with a quantum experiment whose results cannot be explained through real quantum models. Our finding means that imaginary numbers are an essential ingredient in the standard formulation of quantum theory: without them, the theory would lose predictive power. What does this mean? Does this imply that imaginary numbers exist in some way? That depends on how seriously one takes the notion that the elements of the standard quantum theory, or any physical theory, “exist” as opposed to their being just mathematical recipes to describe and make predictions about experimental observations.

The Birth of Imaginary Numbers

Complex numbers date to the early 16th century, when Italian mathematician Antonio Maria Fiore challenged professor Niccolò Fontana “Tartaglia” (the stutterer) to a duel. In Italy at that time, anyone could challenge a mathematics professor to a “math duel,” and if they won, they might get their opponent’s job. As a result, mathematicians tended to keep their discoveries to themselves, deploying their theorems, corollaries and lemmas only to win intellectual battles.

From his deathbed, Fiore’s mentor, Scipione del Ferro, had given Fiore a formula for solving equations of the form x³ + ax = b, also known as cubic equations. Equipped with his master’s achievement, Fiore presented Tartaglia with 30 cubic equations and challenged him to find the value of x in each case.

Tartaglia discovered the formula just before the contest, solved the problems and won the duel. Tartaglia later confided his formula to physician and scientist Gerolamo Cardano, who promised never to reveal it to anyone. Despite his oath, though, Cardano came up with a proof of the formula and published it under his name. The complicated equation contained two square roots, so it was understood that, should the numbers within be negative, the equation would have no solutions, because there are no real numbers that, when multiplied by themselves, produce a negative number.

In the midst of these intrigues, a fourth scholar, Rafael Bombelli, made one of the most celebrated discoveries in the history of mathematics. Bombelli found solvable cubic equations for which the del Ferro-Tartaglia-Cardano formula nonetheless required computing the square root of a negative number. He then realized that, for all these examples, the formula gave the correct solution, as long as he pretended that there was a new type of number whose square equaled −1. Assuming that every variable in the formula was of the form a + √−1 × b with a and b being “normal” numbers, the terms multiplying √−1 canceled out, and the result was the “normal” solution of the equation.
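The cancellation can be checked numerically. The sketch below is an illustration only: it uses the equation x³ = 15x + 4 (that is, a = −15 and b = 4 in the form x³ + ax = b), an example traditionally attributed to Bombelli, and a hypothetical helper named `cardano_root` that applies the formula with Python's built-in complex numbers.

```python
import cmath

def cardano_root(a: float, b: float) -> complex:
    """One root of x^3 + a*x = b from the del Ferro-Tartaglia-Cardano formula."""
    disc = (b / 2) ** 2 + (a / 3) ** 3   # quantity under the square root
    s = cmath.sqrt(disc)                 # purely imaginary when disc < 0
    u = (b / 2 + s) ** (1 / 3)           # principal complex cube root
    v = -a / (3 * u) if u != 0 else 0    # partner cube root, fixed by u * v = -a / 3
    return u + v

# x^3 = 15x + 4: the square root is sqrt(-121), yet the root is an ordinary number.
root = cardano_root(-15, 4)
print(root)  # close to 4, with an imaginary part that vanishes up to rounding
```

Here the two cube roots come out as 2 + √−1 and 2 − √−1, so the imaginary parts cancel in the sum and the "normal" solution x = 4 remains.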

For the next few centuries mathematicians studied the properties of all numbers of the form a + √−1 × b, which were called “complex.” In the 17th century Descartes, considered the father of rational sciences, associated these numbers with nonexistent features of geometric shapes. Thus, he named the number i = √−1 “imaginary,” to contrast it with what he knew as the normal numbers, which he called “real.” Mathematicians still use this terminology today.

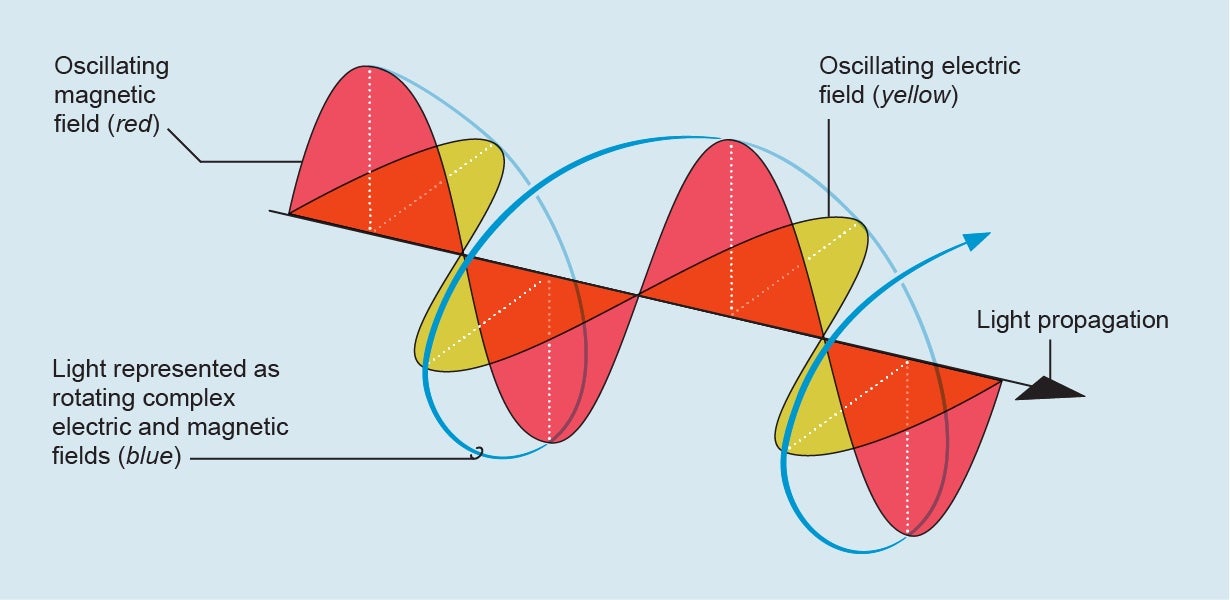

Complex numbers turned out to be a fantastic tool, not only for solving equations but also for simplifying the mathematics of classical physics—the physics developed up until the 20th century. An example is the classical understanding of light. It is easier to describe light as rotating complex electric and magnetic fields than as oscillating real ones, despite the fact that there is no such thing as an imaginary electric field. Similarly, the equations that describe the behavior of electronic circuits are easier to solve if you pretend electric currents have complex values, and the same goes for gravitational waves.

Before the 20th century all such operations with complex numbers were simply considered a mathematical trick. Ultimately the basic elements of any classical theory—temperatures, particle positions, fields, and so on—corresponded to real numbers, vectors or functions. Quantum mechanics, a physical theory introduced in the early 20th century to understand the microscopic world, would radically challenge this state of affairs.

Schrödinger and His Equation

In standard quantum theory, the state of a physical system is represented by a vector (a quantity with a magnitude and direction) of complex numbers called the wave function. Physical properties, such as the speed of a particle or its position, correspond to tables of complex numbers called operators. From the start, this deep reliance on complex numbers went against deeply held convictions that physical theories must be formulated in terms of real magnitudes. Erwin Schrödinger, author of the Schrödinger equation that governs the wave function, was one of the first to express the general dissatisfaction of the physics community. In a letter to physicist Hendrik Lorentz on June 6, 1926, Schrödinger wrote, “What is unpleasant here, and indeed directly to be objected to, is the use of complex numbers. Ψ [the wave function] is surely fundamentally a real function.”

At first, Schrödinger’s uneasiness seemed simple to resolve: he rewrote the wave function, replacing a single vector of complex numbers with two real vectors. Schrödinger insisted this version was the “true” theory and that imaginary numbers were merely for convenience. In the years since, physicists have found other ways to rewrite quantum mechanics based on real numbers. But none of these alternatives has ever stuck. Standard quantum theory, with its complex numbers, has a convenient rule that makes it easy to represent the wave function of a quantum system composed of many independent parts—a feature that these other versions lack.
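One generic way to trade a complex vector for real ones can be sketched in a few lines of Python. This is a standard textbook encoding shown for illustration, not a reconstruction of Schrödinger's own rewriting; the helper names `to_real`, `op_to_real` and `matvec` are hypothetical. A vector of n complex entries becomes 2n real entries, and a complex operator B + iC becomes the real block matrix [[B, −C], [C, B]].

```python
def to_real(vec):
    """Stack real parts, then imaginary parts: n complex entries -> 2n real ones."""
    return [z.real for z in vec] + [z.imag for z in vec]

def op_to_real(mat):
    """Real 2n x 2n block form [[B, -C], [C, B]] of a complex matrix B + iC."""
    top = [[z.real for z in row] + [-z.imag for z in row] for row in mat]
    bottom = [[z.imag for z in row] + [z.real for z in row] for row in mat]
    return top + bottom

def matvec(mat, vec):
    """Plain matrix-vector product on nested lists."""
    return [sum(m * v for m, v in zip(row, vec)) for row in mat]

psi = [(1 + 1j) / 2, (1 - 1j) / 2]   # an arbitrary normalized complex state
op = [[0, -1j], [1j, 0]]             # Pauli Y, an operator with imaginary entries

complex_result = matvec(op, psi)                      # computed with complex numbers
real_result = matvec(op_to_real(op), to_real(psi))    # computed with real numbers only
print(to_real(complex_result), real_result)           # the two lists agree
```

The price of this encoding is that every system doubles in size, which is one way to see why describing many independent parts together stops being as simple as it is in the complex formulation.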

What happens, then, if we restrict wave functions to real numbers and keep the usual quantum rule for composing systems with many parts? At first glance, not much. When we demand that wave functions and operators have real entries, we end up with what physicists often call “real quantum theory.” This theory is similar to standard quantum theory: if we lived in a real quantum world, we could still carry out quantum computations, send secret messages to one another by exchanging quantum particles, and teleport the physical state of a subatomic system over intercontinental distances.

All these applications are based on the counterintuitive features of quantum theory, such as superpositions, entanglement and the uncertainty principle, which are also part of real quantum theory. Because this formulation included these famed quantum features, physicists long assumed that the use of complex numbers in quantum theory was fundamentally a matter of convenience, and real quantum theory was just as valid as standard quantum theory. Back on that autumn morning in 2020 in Toni’s office, however, we began to doubt it.

Falsifying Real Quantum Theory

When designing an experiment to refute real quantum theory, we couldn’t make any assumptions about the experimental devices scientists might use, as any supporter of real quantum theory could always challenge them. Suppose, for example, that we built a device meant to measure the polarization of a photon. An opponent could argue that although we thought we measured polarization, our apparatus actually probed some other property—say, the photon’s orbital angular momentum. We have no way to know that our tools do what we think they do. Yet falsifying a physical theory without assuming anything about the experimental setup sounds impossible. How can we prove anything when there are no certainties to rely on? Luckily, there was a historical precedent.

Despite being one of quantum theory’s founders, Albert Einstein never believed our world to be as counterintuitive as the theory suggested. He thought that although quantum theory made accurate predictions, it must be a simplified version of a deeper theory in which its apparently paradoxical peculiarities would be resolved. For instance, Einstein refused to believe that Heisenberg’s uncertainty principle—which limits how much can be known about a particle’s position and speed—was fundamental. Instead he conjectured that the experimentalists of his time were not able to prepare particles with well-defined positions and speeds because of technological limitations. Einstein assumed that a future “classical” theory (one where the physical state of an elementary particle can be fully determined and isn’t based on probabilities) would account for the outcomes of all quantum experiments.

We now know that Einstein’s intuition was wrong because all such classical theories have been falsified. In 1964 John S. Bell showed that some quantum effects can’t be modeled by any classical theory. He envisioned a type of experiment, now called a Bell test, that involves two experimentalists, Alice and Bob, who work in separate laboratories. Someone in a third location sends each of them a particle, which they measure independently. Bell proved that in any classical theory with well-defined properties (the kind of theory Einstein hoped would win out), the results of these measurements obey some conditions, known as Bell’s inequalities. Then, Bell proved that these conditions are violated in some setups in which Alice and Bob measure an entangled quantum state. The important property is that Bell’s inequalities hold for all classical theories one can think of, no matter how convoluted. Therefore, their violation refuted all such theories.
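A small numerical sketch can make the gap concrete. It assumes one commonly used Bell inequality, the Clauser-Horne-Shimony-Holt (CHSH) form, which is not named in the text above, and it assumes the standard quantum prediction −cos(α − β) for the correlation of spin measurements along in-plane angles α and β on a maximally entangled "singlet" pair. The angles chosen for Alice and Bob are illustrative.

```python
from itertools import product
from math import cos, pi, sqrt

def chsh(e00, e01, e10, e11):
    """CHSH combination of the four correlations E(setting_A, setting_B)."""
    return e00 + e01 + e10 - e11

# Classical case: every outcome is fixed in advance to +1 or -1.
# Checking all 16 deterministic assignments suffices, because random
# mixtures of them cannot exceed the best single assignment.
classical_bound = max(
    abs(chsh(a0 * b0, a0 * b1, a1 * b0, a1 * b1))
    for a0, a1, b0, b1 in product((-1, 1), repeat=4)
)

def singlet_correlation(alpha, beta):
    """Assumed quantum prediction for a singlet pair measured at angles alpha, beta."""
    return -cos(alpha - beta)

alice = (0.0, pi / 2)      # Alice's two measurement angles (illustrative choice)
bob = (pi / 4, -pi / 4)    # Bob's two measurement angles (illustrative choice)
quantum_value = abs(chsh(
    singlet_correlation(alice[0], bob[0]),
    singlet_correlation(alice[0], bob[1]),
    singlet_correlation(alice[1], bob[0]),
    singlet_correlation(alice[1], bob[1]),
))

print(classical_bound, quantum_value, 2 * sqrt(2))
```

Under these assumptions the classical assignments never exceed 2, whereas the quantum prediction reaches 2√2, which is the kind of violation a Bell test looks for.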

Various Bell tests performed in labs since then have measured just what quantum theory predicts. In 2015 Bell experiments done in Delft, Netherlands; Vienna, Austria; and Boulder, Colo., finally did so while closing all the loopholes previous experiments had left open. Those results do not tell us that our world is quantum; rather they prove that, contra Einstein, it cannot be ruled by classical physics.

Could we devise an experiment similar to Bell’s that would rule out quantum theory based on real numbers? To achieve this feat, we first needed to envision a standard quantum theory experiment whose outcomes cannot be explained by the mathematics of real quantum theory. We planned to first design a gedankenexperiment—a thought experiment—that we hoped physicists would subsequently carry out in a lab. If it could be done, we figured, this test should convince even the most skeptical supporter that the world is not described by real quantum theory.

Our first, simplest idea was to try to upgrade Bell’s original experiment to falsify real quantum theory, too. Unfortunately, two independent studies published in 2008 and 2009—one by Károly Pál and Tamás Vértesi and another by Matthew McKague, Michele Mosca and Nicolas Gisin—found this wouldn’t work. The researchers were able to show that real quantum theory could predict the measurements of any possible Bell test just as well as standard quantum theory could. Because of their research, most scientists concluded that real quantum theory was irrefutable. But we and our co-authors proved this conclusion wrong.

Designing the Experiment

Within two months of our conversation in Castelldefels, our little project had gathered eight theoretical physicists, all based there or in Geneva or Vienna. Although we couldn’t meet in person, we exchanged e-mails and held online discussions many times a week. It was through a combination of long solitary walks and intensive Zoom meetings that on one happy day of November 2020 we came up with a standard quantum experiment that real quantum theory could not model. Our key idea was to abandon the standard Bell scenario, in which a single source distributes particles to several separate parties, and consider a setup with several independent sources. We had observed that, in such a scenario, which physicists call a quantum network, the Pál-Vértesi-McKague-Mosca-Gisin method could not reproduce the experimental outcomes predicted by complex number quantum theory. This was a promising start, but it was not enough: similarly to what Bell achieved for classical theories, we needed to rule out the existence of any form of real quantum theory, no matter how clever or sophisticated, that could explain the results of quantum network experiments. For this, we needed to devise a concrete gedankenexperiment in a quantum network and show that the predictions of standard quantum theory were impossible to model with real quantum theory.

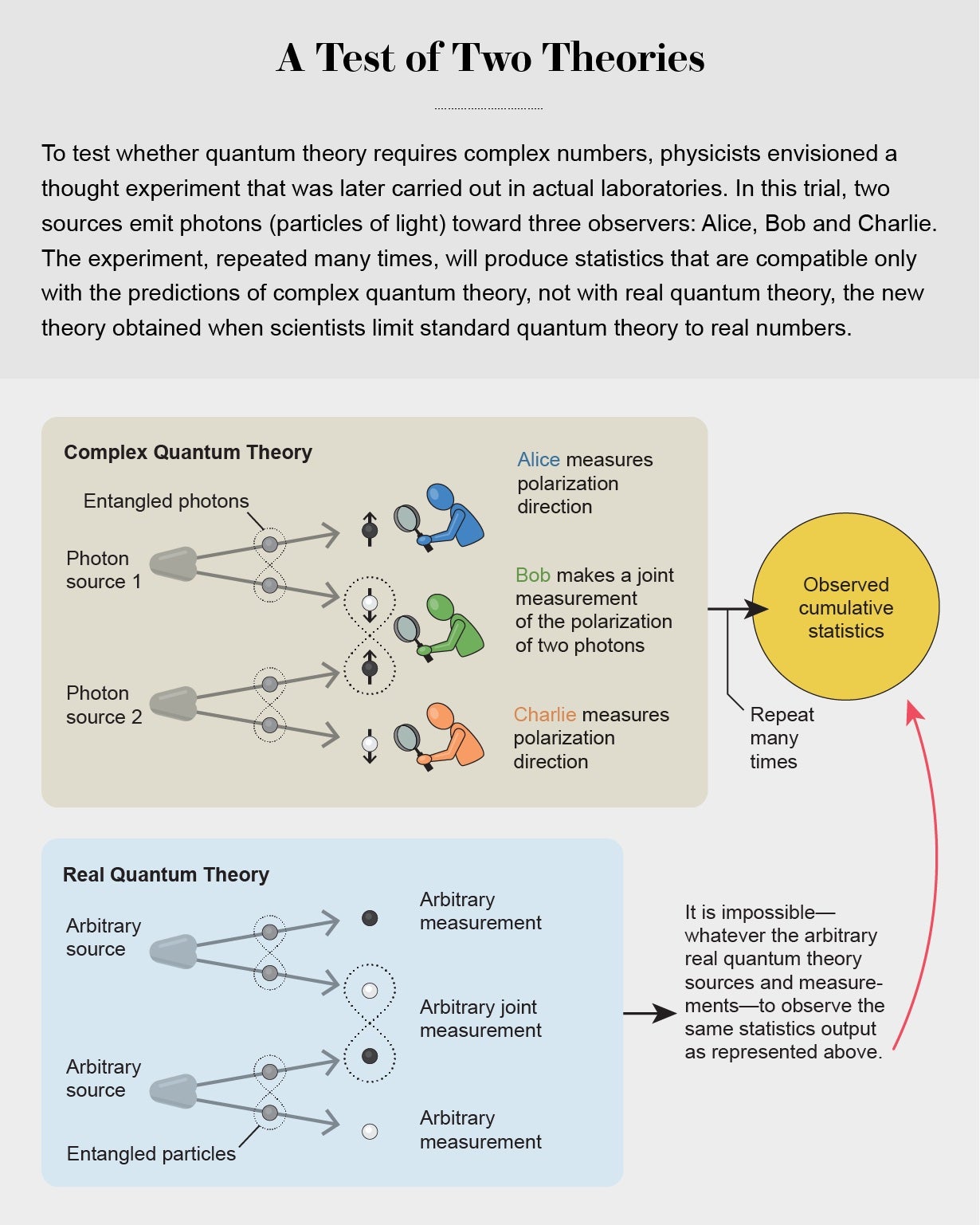

Initially we considered complicated networks involving six experimentalists and four sources. In the end, however, we settled for a simpler quantum experiment with three separate experimenters called Alice, Bob and Charlie and two independent particle sources. The first source sends out two particles of light (photons), one to Alice and one to Bob; the second one sends photons to Bob and Charlie. Next, Alice and Charlie choose a direction in which to measure the polarization of their particles, which can turn out to be “up” or “down.” Meanwhile Bob measures his two particles. When we do this over and over again, we can build up a set of statistics showing how often the measurements correlate. These statistics depend on the directions Alice and Charlie choose.

Next, we needed to show that the observed statistics could not be predicted by any real quantum system. To do so, we relied on a powerful concept known as self-testing, which allows a scientist to certify both a measurement device and the system it’s measuring at once. What does that mean? Think of a measurement apparatus, for instance, a weight scale. To guarantee that it’s accurate, you need to test it with a mass of a certified weight. But how to certify this mass? You must use another scale, which itself needs to be certified, and so on. In classical physics, this process has no end. Astonishingly, in quantum theory, it’s possible to certify both a measured system and a measurement device simultaneously, as if the scale and the test mass were checking each other’s calibration.

With self-testing in mind, our impossibility proof worked as follows. We conceived of an experiment in which, for any of Bob’s outcomes, Alice and Charlie’s measurement statistics self-tested their shared quantum state. In other words, the statistics of one confirmed the quantum nature of the other, and vice versa. We found that the only description of the devices that was compatible with real quantum theory had to be precisely the Pál-Vértesi-McKague-Mosca-Gisin version, which we already knew didn’t work for a quantum network. Hence, we arrived at the contradiction we were hoping for: real quantum theory could be falsified.

We also found that as long as any real-world measurement statistics observed by Alice, Bob and Charlie were close enough to those of our ideal gedankenexperiment, they could not be reproduced by real quantum systems. The logic was very similar to Bell’s theorem: we ended up deriving a Bell’s inequality for real quantum theory and proving that it could be violated by complex quantum theory, even in the presence of noise and imperfections. That allowance for noise is what makes our result testable in practice. No experimentalists ever achieve total control of their lab; the best they can hope for is to prepare quantum states that are approximately what they were aiming for and to make approximately the measurements they intended, which will allow them to generate approximately the same measurement statistics that were predicted. The good news is that within our proof, the experimental precision required to falsify real quantum theory, though demanding, was within reach of current technologies. When we announced our results, we hoped it was just a matter of time before someone, somewhere, would realize our vision.

It happened quickly. Just two months after we made our discovery public, an experimental group in Shanghai reported implementing our gedankenexperiment with superconducting qubits—computer bits made of quantum particles. Around the same time, a group in Shenzhen also contacted us to discuss carrying out our gedankenexperiment with optical systems. Months later, we read about yet another optical version of the experiment, also conducted in Shanghai. In each case, the experimenters observed correlations between the measurements that real quantum theory could not account for. Although there are still a few experimental loopholes to take care of, taken together these three experiments make the real quantum hypothesis very difficult to sustain.

The Quantum Future

We now know neither classical nor real quantum theory can explain certain phenomena, so what comes next? If future versions of quantum theory are proposed as alternatives to the standard theory, we could use a similar technique to try to exclude them as well. Could we go one step further and falsify standard quantum theory itself?

If we did, we would be left with no theory for the microscopic world given that we currently lack an alternative. But physicists are not convinced that standard quantum theory is true. One reason is that it seems to conflict with one of our other theories, general relativity, used to describe gravity. Scientists are seeking a new, deeper theory that could reconcile these two and perhaps replace standard quantum theory. If we could ever falsify quantum theory, we might be able to point the way toward that deeper theory.

In parallel, some researchers are trying to prove that no theory other than quantum will do. One of our co-authors, Mirjam Weilenmann, in collaboration with Roger Colbeck, recently argued that it may be possible to discard all alternative physical theories through suitable Bell-like experiments. If this were true, then those experiments would show that quantum mechanics is indeed the only physical theory compatible with experimental observations. The possibility makes us shiver: Can we really hope to demonstrate that quantum theory is so special?

Editor’s Note (4/6/23): The box “What Are Imaginary Numbers?” was revised after posting to correct the description of how rational numbers include the integers. The article was previously amended on March 24 to add a caption to the opening illustration and to correct two equations.