What would you do with an extra pair of hands? The idea might sound unwieldy, like too much of a good thing. But a new study suggests that people can in fact adapt to using additional robotic arms as if the limbs were their own body parts.

For decades, scientists have been investigating how the human brain behaves when people manipulate tools. It is now thought that when you pick up a wrench or a screwdriver, your brain interprets it as a substitute for your own hand. When you wield a long stick, your sense of personal space extends to accommodate the object’s full length so that you don’t accidentally whack someone with it when you turn around. But what happens to your perception of your own body when you add entirely new parts rather than just changing the function, shape or size of existing ones?

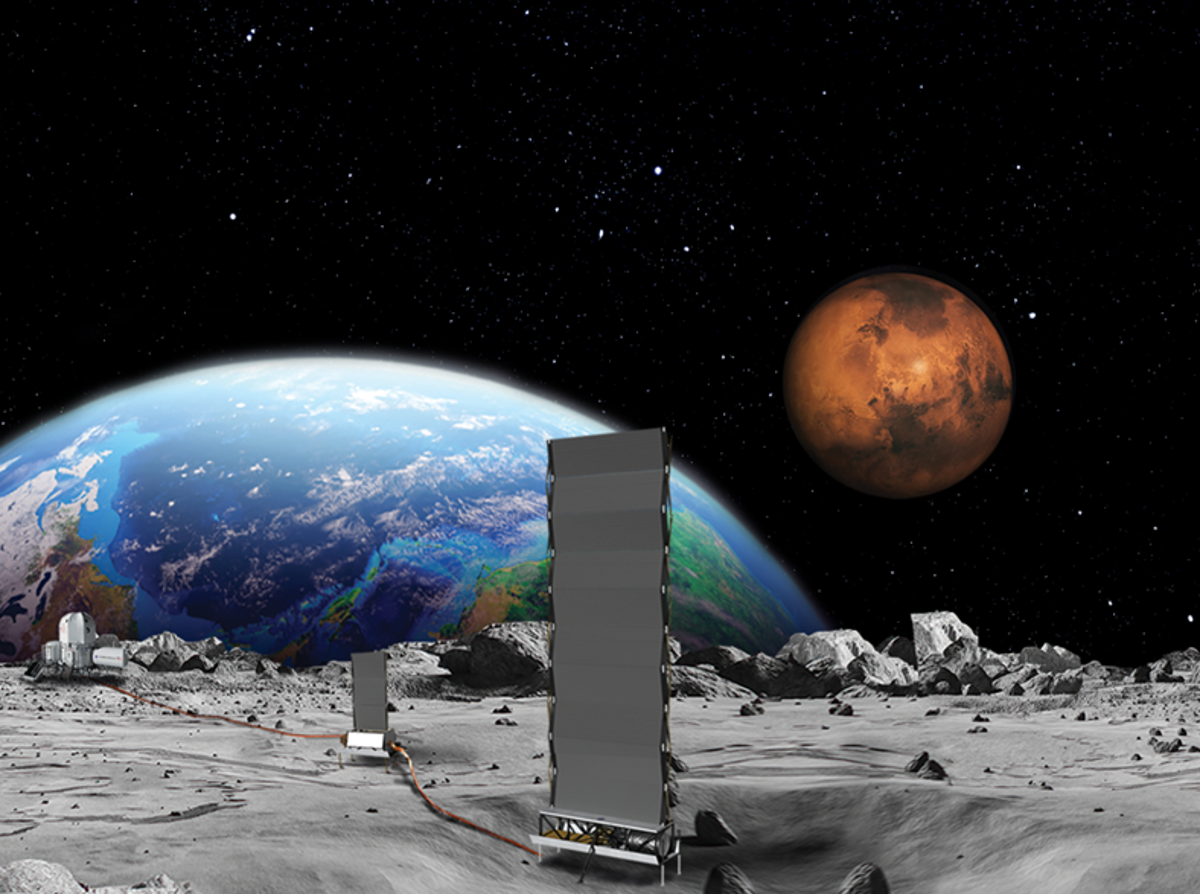

That question could influence the design of new robotic devices and virtual reality spaces. Many roboticists are interested in building systems that would give humans the ability to use additional limbs and potentially enable people to complete tasks that require an extra arm or leg—or even a tail. Virtual reality provides an opportunity for people to try experiences that aren’t yet possible in the real world and to act as avatars that might look nothing like their controllers (or even like humans at all). To be useful and usable, however, any additional real or virtual body parts need to blend in as if they’ve always been there. So understanding if and how this is possible is key for designing both real-life cyborg parts and immersive video games.

A variety of experiments have attempted to determine how humans respond to having an extra appendage. In these tests, researchers outfitted participants with an extra arm, hand or finger made of rubber, then touched the fake extra part at the same time as they touched a real, sensing one. These experiments showed that humans can feel like these extra, or “supernumerary,” limbs are a part of their body. But whether people can effectively use controllable sets of new limbs had not yet received much study.

This was the goal of new research published last month in Scientific Reports. Researchers immersed participants in a VR environment that included an avatar of themselves, complete with an extra pair of virtual robotic arms just below their real ones. The VR environment was crucial to understanding how humans might adapt to extra robotic body parts, says study co-author Ken Arai, a roboticist and cognitive scientist at the University of Tokyo. Experimenting with a real-world pair of robotic arms comes with the challenge of getting them to move without delay, which is what our brains expect from our real body parts. But in VR, the time lag between sensor input and the visible movement of the virtual arms is shorter, which makes the experience more true to life.

Participants controlled the simulated robo arms using sensors attached to their feet and waist. Moving the lower leg in the physical world would trigger the extra arm on that side of the body to move in VR space. Bending the toes made the corresponding virtual hand perform a grabbing motion. People manipulating the arms in VR were also able to feel when the limbs interacted with virtual objects. For example, if the palm of one simulated robotic hand touched something in the VR space, participants felt a vibration against the sole of the foot on the same side of the body.

Once hooked into the VR setup, participants dove into a coordination task, using the extra arms to “touch” balls that appeared in random locations. After each attempt, participants rated how much they agreed with statements such as “I felt as if the virtual robot limbs/arms were my limbs/arms” and “I felt as if the movements of the virtual robot arm were influencing my own movements.” When they had completed the ball-touch task multiple times, people’s responses became faster—and they also reported feeling more ownership of and agency over their new arms.

Another experiment tested how quickly people moved their robotic arms in response to virtual touches. Here, participants felt vibrations on their feet while seeing virtual objects touch their artificial limbs, and they were instructed to move their robotic arms away from those objects. Sometimes the location of the physical vibration on the foot matched the location where the virtual ball touched the limb, as it had in the ball-touch experiment. (For example, a vibration on the top of the left foot would indicate contact on the back of the virtual left hand.) But sometimes the sensation didn’t match where the object appeared to be in VR. When the visible location of the VR object and the place where the robotic limb felt as if it were being touched lined up, participants jerked their robotic arms away slightly more quickly than they did when the two did not correspond. This pattern was also seen when the same experiment was carried out on people’s real-world limbs. The researchers interpret this as a sign that participants’ subconscious sense of personal space expanded to include the visible area around their robotic arms in VR.

Overall, the results suggest participants felt like they had acquired whole new body parts—not just like they had extended their existing feet by adding a new tool. This potentially opens up a world of virtual and real possibilities.

“In virtual reality, we can have avatars of any shape or dimension,” says Andrea Stevenson Won, a human-computer interaction researcher who runs the Virtual Embodiment Lab at Cornell University and was not involved in the study. “You could give yourself wings and fly around in a virtual space and get this euphoric experience.” Learning more about how people will feel about their avatars’ additional body parts will help researchers design that experience. “How people might interpret avatar bodies, which don’t exist physically, and react to them as though they were real in some sense is an interesting question,” Won adds.

Arai, on the other hand, is most excited about the potential to expand what humans might be able to do in the physical world. Existing robotic systems could literally lend people an extra pair of hands. “Maybe this knowledge [from the VR system] can be adapted to the actual robotic system as well. This kind of feedback loop will be very important to improve supernumerary robotic limb designs,” he says. “We want to enable impossible things for humans. If we want to add more limbs, that should be possible. Everything should be possible.”