The silicone lips moved precisely with each syllable, forming the rounded shape for “hello,” the closed position for “world.” For the first time, a robot had learned to synchronize speech and lip movement not through preprogrammed rules, but by watching itself in a mirror.

This isn’t just another incremental advance in robotics. It’s a fundamental shift in how machines might connect with us.

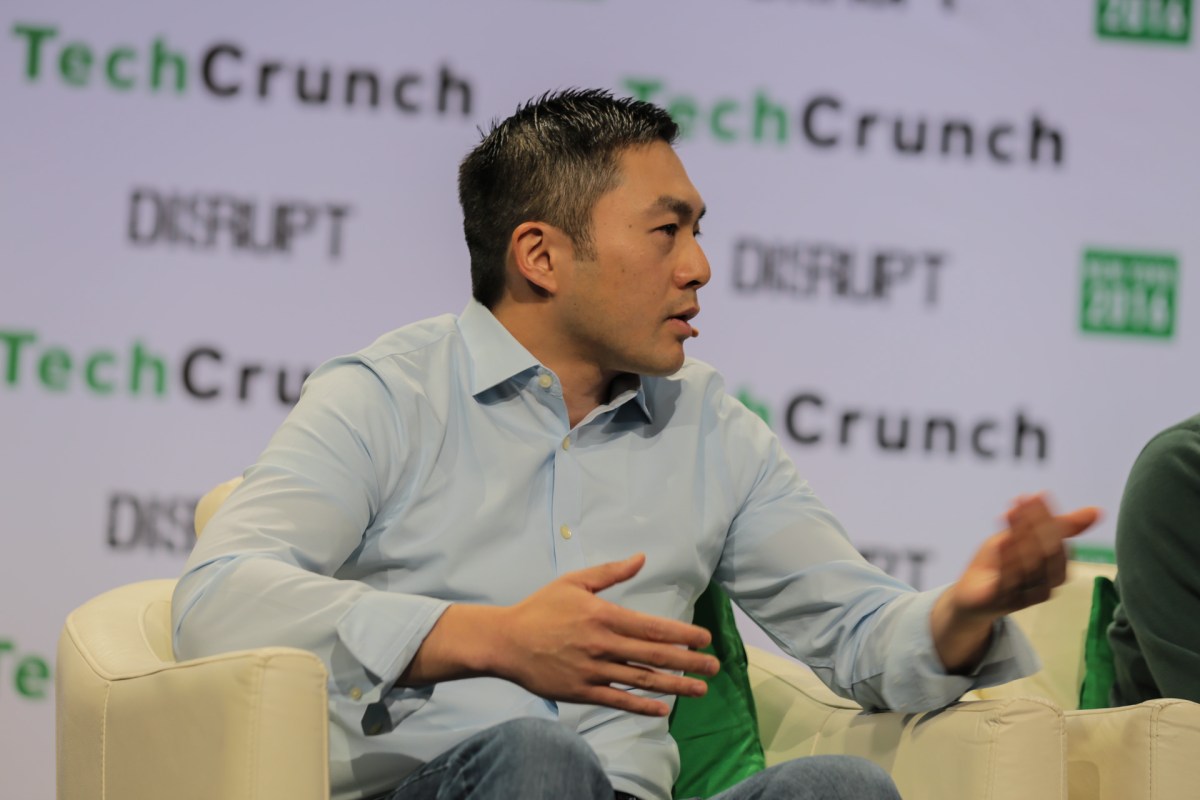

Hod Lipson’s lab at Columbia University has spent years trying to cross what roboticists call the “uncanny valley.” That’s the unsettling zone where humanoid robots look almost, but not quite, human enough. The problem has always been the face, and particularly the mouth. Even sophisticated humanoids tend to move their lips like muppets, opening and closing in rough approximation of speech. We humans, it turns out, are ruthlessly unforgiving of facial mistakes.

We attribute outsized importance to facial gestures, says Lipson, who directs Columbia’s Creative Machines Lab. The numbers bear this out. During face-to-face conversation, nearly half our visual attention focuses on lip motion. When lips don’t match speech, even by a fraction of a second, we notice immediately.

The challenge has two parts. First, you need intricate mechanical hardware: a flexible face with enough motors to form subtle shapes. Then comes the harder bit, teaching the robot which shapes to make and when. Traditional approaches involved manually programming lip movements for each phoneme. That’s a tedious process that produced stilted, unconvincing results. It’s rather like trying to teach locomotion through explicit rules instead of letting the robot learn to walk.

Lipson’s team took a different approach. They built a face with 10 degrees of freedom in the lips alone: two motors for each corner, three for the upper lip, one for the jaw, and two for the lower lip. The corners can retract or protrude, allowing the tight lip seal needed for sounds like “b” and “p.” Magnetic connectors let the soft silicone skin attach precisely to the mechanical infrastructure beneath, which makes it easy to swap faces for rapid iteration.
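The motor count is easy to check by hand. The tally below is only an illustrative sketch: the dictionary keys are made-up labels, and it assumes the face has two lip corners, which the description implies but does not state outright.

```python
# Hypothetical labels for the lip actuators described above.
# Assumes two lip corners (left and right), each driven by two motors.
LIP_MOTORS = {
    "left_corner": 2,
    "right_corner": 2,
    "upper_lip": 3,
    "jaw": 1,
    "lower_lip": 2,
}

# Sum the motors in every group to get the lips' degrees of freedom.
total_dof = sum(LIP_MOTORS.values())
print(f"Lip degrees of freedom: {total_dof}")
```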

Then they gave it a mirror. For hours, the robot made random facial movements (pouts, puckers, grimaces) while a camera recorded what each motor configuration produced. Like a baby discovering its own reflection, the robot learned which commands created which expressions. This self-model became the foundation for everything that followed.
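The mirror phase can be pictured as a simple record-and-recall loop. The sketch below is a toy illustration, not the lab's method: `simulated_face` is a hypothetical stand-in for the robot and camera, it uses two motors instead of ten, and it swaps the learned model for a plain nearest-neighbor lookup.

```python
import random

def simulated_face(command):
    """Hypothetical stand-in for robot plus camera.

    Maps two motor values (corner, jaw) to an observed lip shape
    (mouth_width, mouth_opening). The real face has 10 lip motors.
    """
    corner, jaw = command
    return (1.0 + 0.5 * corner, 0.8 * jaw)

def babble(n_samples, seed=0):
    """Issue random motor commands and record the shape each one produces."""
    rng = random.Random(seed)
    samples = []
    for _ in range(n_samples):
        command = (rng.uniform(-1, 1), rng.uniform(0, 1))
        samples.append((command, simulated_face(command)))
    return samples

def predict_shape(samples, command):
    """Toy self-model: reuse the shape seen for the nearest recorded command."""
    def distance(sample):
        recorded_command = sample[0]
        return sum((a - b) ** 2 for a, b in zip(recorded_command, command))
    return min(samples, key=distance)[1]

samples = babble(2000)
query = (0.2, 0.5)
predicted = predict_shape(samples, query)
actual = simulated_face(query)
# Largest per-coordinate gap between the self-model's guess and the real shape.
error = max(abs(p - a) for p, a in zip(predicted, actual))
```

The more the toy robot babbles, the closer its nearest recorded command sits to any new query, and the better its guess about its own face.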

The next step involved synthesized video. The team used existing AI tools to generate videos of the robot’s face speaking, lips perfectly synchronized with audio. These videos provided a target, the lip shapes the robot should aim for. But here’s the clever part: they didn’t try to directly control the motors based on sound. Instead, they trained a transformer network to watch the synthesized videos and figure out which motor commands would recreate those lip movements on the real robot.
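The idea of working backward from a target lip shape to motor commands can be sketched in a few lines. This is a deliberately simplified illustration under stated assumptions: the team trained a transformer network, whereas the code below substitutes a brute-force search over a grid of candidate commands, and `forward_model`, the two-motor setup, and the target frames are all hypothetical.

```python
import itertools

def forward_model(command):
    """Hypothetical self-model: (corner, jaw) -> (mouth_width, mouth_opening)."""
    corner, jaw = command
    return (1.0 + 0.5 * corner, 0.8 * jaw)

# Candidate commands on a coarse grid: corner in [-1, 1], jaw in [0, 1].
CORNER_STEPS = [i / 10 for i in range(-10, 11)]
JAW_STEPS = [i / 10 for i in range(0, 11)]
CANDIDATES = list(itertools.product(CORNER_STEPS, JAW_STEPS))

def command_for(target_shape):
    """Pick the candidate whose predicted shape is closest to the target."""
    def shape_error(command):
        predicted = forward_model(command)
        return sum((p - t) ** 2 for p, t in zip(predicted, target_shape))
    return min(CANDIDATES, key=shape_error)

# Made-up lip shapes standing in for frames of a synthesized video.
target_frames = [(1.0, 0.0), (0.75, 0.4), (1.25, 0.8)]
commands = [command_for(frame) for frame in target_frames]
```

A grid search like this would not scale to ten motors and continuous video, which is one reason a learned network is the more practical choice.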

The system can now lip-sync speech in 11 languages: English, its main training language, plus 10 it was never trained on (French, Japanese, Korean, Spanish, Italian, German, Russian, Chinese, Hebrew, and Arabic). The multilingual capability emerged almost by accident. Train predominantly on English speech patterns, it seems, and the underlying lip-audio relationships generalize surprisingly well across different phonetic systems.

There are still obvious limitations. Hard sounds like “b” give it trouble, as do shapes requiring lip puckering like “w.” The synchronization isn’t perfect. Human speakers typically begin shaping their lips 80 to 300 milliseconds before any sound emerges, a predictive ability the current system lacks. And the mechanical constraints of servo motors and elastic skin mean some movements remain kinematically difficult or impossible.
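To make the timing gap concrete, here is a rough sketch of what an anticipatory lead would mean for scheduling. It is purely illustrative: the function name, the 150-millisecond default, and the sample onsets are assumptions, and nothing here describes how the lab's system works or plans to work.

```python
def lip_start_times(audio_onsets_ms, lead_ms=150):
    """Return when lip shaping would begin for each sound onset.

    lead_ms is a hypothetical anticipation offset, restricted to the
    80 to 300 millisecond range quoted for human speakers.
    """
    if not 80 <= lead_ms <= 300:
        raise ValueError("lead_ms should fall between 80 and 300 milliseconds")
    # Start each lip movement early, but never before time zero.
    return [max(0, onset - lead_ms) for onset in audio_onsets_ms]

# Made-up sound onsets, in milliseconds from the start of the clip.
onsets = [100, 400, 900]
starts = lip_start_times(onsets)
```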

But Yuhang Hu, who led the research for his PhD, sees the deeper significance. When you combine this lip-sync capability with conversational AI like ChatGPT, the emotional connection changes. The robot becomes less tool, more presence. Which is precisely what worries the researchers.

“This will be a powerful technology,” Lipson acknowledges. “We have to go slowly and carefully, so we can reap the benefits while minimizing the risks.”

As robots grow more adept at connecting with humans (through smiles, eye contact, speech), they could be exploited to gain trust from vulnerable people. Children and the elderly especially. Even well-meaning applications in healthcare or elder care could create problematic emotional dependencies.

Some economists estimate over a billion humanoid robots will be manufactured in the next decade. Most will need faces, Lipson argues, because humans are simply wired to respond to facial cues. We can’t help it. And faceless robots will forever remain uncanny.

The team recently released their robot’s debut music album. It’s an AI-generated collection called “hello world_” that demonstrates the system singing as well as speaking. It’s a peculiar milestone in robotics, this singing robot, but it points to something larger. For the first time, machines are learning to communicate in the full audiovisual channel that humans use, not through audio alone.

Whether we’re ready for robots that can smile at us, speak to us with properly synchronized lips, and connect with us on an emotional level remains an open question. But the technology is here. Lipson, who calls himself a jaded roboticist, admits he can’t help but smile back when the robot spontaneously smiles at him. Something magical happens, he says, when a robot learns these gestures by watching and listening to humans.

The uncanny valley might finally have a bridge across it. Whether we should cross remains to be seen.
