On October 8 the Nobel Prize in Physics was awarded for the development of machine learning. The next day, the chemistry Nobel honored protein structure prediction via artificial intelligence. Reaction to this AI double whammy might have registered on the Richter scale.

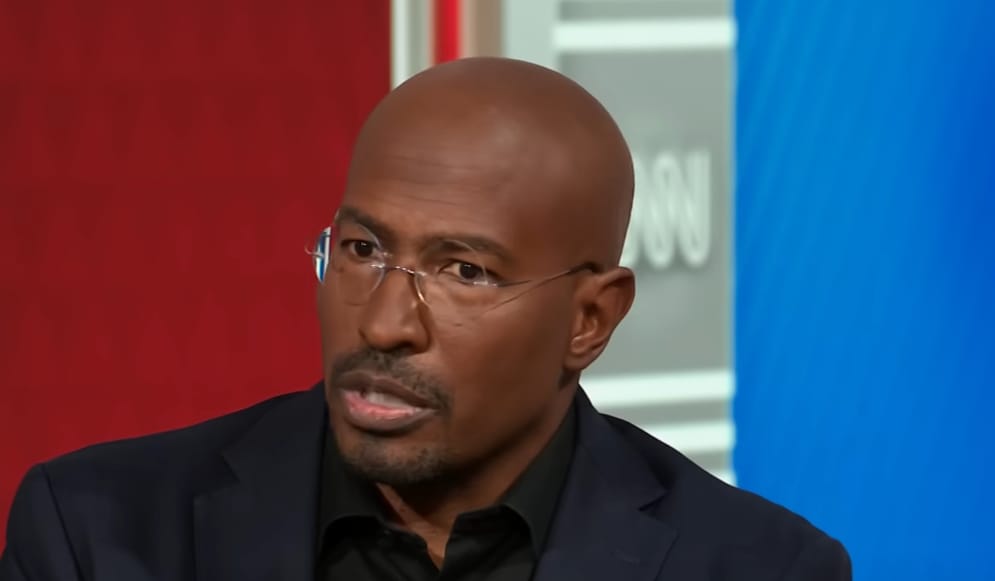

Some argued that the physics prize, in particular, was not physics. “A.I. is coming for science, too,” the New York Times concluded. Less moderate commenters went further: “Physics is now officially finished,” one onlooker declared on X (formerly Twitter). Future physics and chemistry prizes, a physicist joked, would inevitably be awarded to advances in machine learning. In a laconic email to the AP, newly anointed physics laureate and AI pioneer Geoffrey Hinton issued his own prognostication: “Neural networks are the future.”

For decades, AI research was a relatively fringe domain of computer science. Its proponents often trafficked in prophetic predictions that AI would eventually bring about the dawn of superhuman intelligence. Suddenly, within the past few years, those visions have become vivid. The advent of large language models with powerful generative capabilities has fueled speculation that AI will encroach on every branch of human achievement. AIs can receive a prompt and spit out illustrations, essays and solutions to complex math problems, and now, Nobel-winning discoveries. Have AIs taken over the science Nobels, and possibly science itself?


Not so fast. Before we either happily swear fealty to our future benevolent computer overlords or eschew every technology since the pocket calculator (co-inventor Jack Kilby won part of the 2000 physics Nobel, by the way), perhaps a bit of circumspection is in order.

To begin with, what were the Nobels really awarded for? The physics prize went to Hinton and John Hopfield, a physicist (and former president of the American Physical Society), who discovered how the physical dynamics of a network can encode memory. Hopfield came up with an intuitive analogy: a ball, rolling across a bumpy landscape, will often “remember” to return to the same lowest valley. Hinton’s work extended Hopfield’s model by showing how increasingly complex neural networks with hidden “layers” of artificial neurons can learn better. In short, the physics Nobel was awarded for fundamental research about the physical principles of information, not the broad umbrella of “AI” and its applications.
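To make Hopfield's rolling-ball analogy concrete, here is a minimal sketch of a classic Hopfield network in Python. It assumes the standard textbook formulation (neurons that are either +1 or -1, a Hebbian learning rule and one-neuron-at-a-time updates); the function names and the two eight-neuron "memories" are hypothetical, invented for illustration rather than taken from Hopfield's work. Each stored pattern sits at the bottom of a valley in an "energy" landscape, and because no single update can raise the energy, a corrupted pattern rolls downhill into the valley of the memory it most resembles.

```python
import random

# A minimal Hopfield network sketch (illustrative; not Hopfield's original code).
# Neurons take values +1 or -1; weights are symmetric with no self-connections.

def train(patterns):
    """Store patterns with the Hebbian rule: w[i][j] = mean over patterns of p[i] * p[j]."""
    n = len(patterns[0])
    w = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:  # no neuron connects to itself
                    w[i][j] += p[i] * p[j] / len(patterns)
    return w

def energy(w, s):
    """The 'height' of state s on the landscape: E = -1/2 * sum_ij w[i][j] * s[i] * s[j]."""
    n = len(s)
    return -0.5 * sum(w[i][j] * s[i] * s[j] for i in range(n) for j in range(n))

def recall(w, state, max_sweeps=10, seed=0):
    """Update one neuron at a time, in random order, until nothing changes.

    With symmetric weights and a zero diagonal, no single update can raise the
    energy, so the state 'rolls downhill' toward a nearby valley.
    """
    rng = random.Random(seed)
    s = list(state)
    n = len(s)
    for _ in range(max_sweeps):
        changed = False
        order = list(range(n))
        rng.shuffle(order)
        for i in order:
            field = sum(w[i][j] * s[j] for j in range(n))  # input from all other neurons
            new_value = 1 if field >= 0 else -1
            if new_value != s[i]:
                s[i] = new_value
                changed = True
        if not changed:  # settled in a valley (a stable state)
            break
    return s

if __name__ == "__main__":
    # Two made-up, mutually orthogonal 8-neuron "memories".
    a = [1, 1, 1, 1, -1, -1, -1, -1]
    b = [1, -1, 1, -1, 1, -1, 1, -1]
    w = train([a, b])

    noisy = [-a[0]] + a[1:]  # memory a with its first neuron flipped
    recovered = recall(w, noisy)
    print(recovered == a)                          # True
    print(energy(w, noisy), energy(w, recovered))  # -6.0 -12.0
```

The two patterns were chosen to be orthogonal so that their valleys do not interfere; packing many overlapping memories into a network this small would start to produce spurious valleys that match none of them.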

The chemistry prize, meanwhile, was half awarded to David Baker, a biochemist, while the other half went to two researchers at the AI company DeepMind: Demis Hassabis, a computer scientist and DeepMind’s CEO, and John Jumper, a chemist and DeepMind director. For proteins, form is function, their tangled skeins assembling into elaborate shapes that act as keys to fit into myriad molecular locks. But it has been extremely difficult to predict the emergent structure of a protein from its amino acid sequence. Imagine trying to guess the way a length of chain will fold up. First, Baker developed software to address this problem, including a program to design novel protein structures from scratch. Yet by 2018, of the roughly 200 million proteins cataloged in all genetic databases, only about 150,000, less than 0.1 percent, had confirmed structures. Then Hassabis and Jumper debuted AlphaFold in a predictive protein-folding challenge. Its first iteration beat the competition by a wide margin; the second provided highly accurate predictions of the folded structures of nearly all of the roughly 200 million cataloged proteins.

AlphaFold is “the ground-breaking application of AI in science,” a 2023 review of protein folding stated. But even so, the AI has limitations; its second iteration failed to predict defects in proteins and struggled with “loops,” a kind of structure crucial for drug design. It’s not a panacea for each and every problem in protein folding, but rather a tool par excellence, akin to many others that have received prizes over the years: the 2014 physics prize for blue light-emitting diodes (in nearly every LED screen today) or the 2019 chemistry prize for lithium-ion batteries (still essential, even in an age of phone flashlights).

Many of these tools have since disappeared into their uses. We rarely pause to consider transistors (the subject of the 1956 physics prize) when we use electronics containing them by the billions. Some powerful machine-learning features are already on this path. The neural networks that provide accurate language translation or eerily apt song recommendations in popular consumer software programs are simply part of the service; the algorithm has faded into the background. If this trend holds, AI tools in science, as in so many other domains, will fade into the background, too, once they become commonplace.

Still, a reasonable concern is that such automation, whether subtle or overt, threatens to supersede or sully the efforts of human physicists and chemists. As AI becomes integral to further scientific progress, will any prizes recognize work truly free of AI? “It is difficult to make predictions, especially about the future,” as many people, including the Nobel-winning physicist Niels Bohr and the iconic baseball player Yogi Berra, are reported to have said.

AI can revolutionize science; of that there is no doubt. It has already helped us see proteins with previously unimaginable clarity. Soon AIs may dream up new molecules for batteries or find new particles hiding in data from colliders. In short, they may do many things, some of which previously seemed impossible. But they have a crucial limitation tied to something wonderful about science: its empirical dependence on the real world, which cannot be overcome by computation alone.

An AI, in some respects, can only be as good as the data it’s given. It cannot, for example, use pure logic to discover the nature of dark matter, the mysterious substance that makes up more than 80 percent of matter in the universe. Instead it will have to rely on observations from an ineluctably physical detector with components perennially in need of elbow grease. To discover the real world, we will always have to contend with such corporeal hiccups.

Science also needs experimenters: human experts who are driven to study the universe and who will ask questions an AI cannot. As Hopfield himself explained in a 2018 essay, physics (science itself, really) is not a subject so much as “a point of view,” its core ethos being “that the world is understandable” in quantitative, predictive terms solely by virtue of careful experiment and observation.

That real world, in its endless majesty and mystery, still exists for future scientists to study, whether aided by AI or not.

This is an opinion and analysis article, and the views expressed by the author or authors are not necessarily those of Scientific American.