An algorithm created by AI firm DeepMind can distinguish between videos in which objects obey the laws of physics and ones where they don’t

11 July 2022

Watching videos of objects interact helped an AI learn physics (Image: Audio und werbung/Shutterstock)

Teaching artificial intelligence to understand simple physics concepts, such as that one solid object can’t occupy the same space as another, could lead to more capable software that takes fewer computational resources to train, say researchers at DeepMind.

The UK-based company has previously created AI that can beat expert players at chess and Go, write computer software and solve the protein-folding problem. But these models are highly specialised and lack a general understanding of the world. As DeepMind’s researchers say in their latest paper, “something fundamental is still missing”.

Now, Luis Piloto at DeepMind and his colleagues have created an AI called Physics Learning through Auto-encoding and Tracking Objects (PLATO) that is designed to understand that the physical world is composed of objects that follow basic physical laws.

The researchers trained PLATO to identify objects and their interactions by using simulated videos of objects moving as we would expect, such as balls falling to the ground, rolling behind each other and bouncing off each other. They also gave PLATO data showing exactly which pixels in every frame belonged to each object.

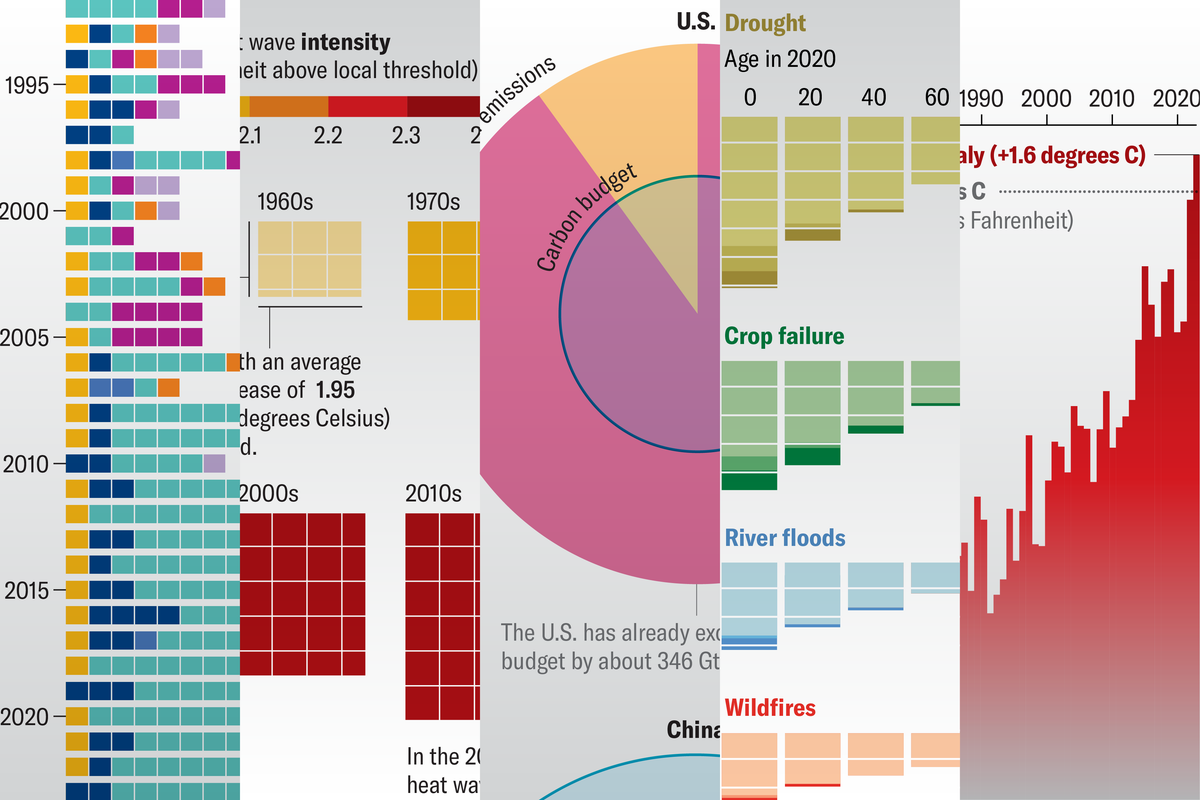

To test PLATO’s ability to understand five physical concepts, including persistence (that an object tends not to vanish), solidity and unchangingness (that an object tends to retain features like shape and colour), the researchers used another series of simulated videos. Some showed objects obeying the laws of physics, while others depicted nonsensical actions, such as a ball rolling behind a pillar, not emerging from the other side, but then reappearing from behind another pillar further along its route.

They tasked PLATO with predicting what would happen next in each video, and found that its predictions were reliably wrong for nonsensical videos, but usually correct for logical ones, suggesting the AI has an intuitive knowledge of physics.
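The logic of this test can be illustrated with a toy sketch. It is not DeepMind's model: it assumes a hypothetical one-dimensional "video" that records only a ball's position in each frame, and it swaps PLATO's learned predictor for a simple constant-velocity rule. The names `predict_next` and `surprise` are invented for illustration. The point is only that a predictor tuned to ordinary motion accumulates a large prediction error when an object does something physically impossible, such as jumping ahead along its path.

```python
# Toy illustration only: a hand-written constant-velocity predictor stands in
# for a learned model. All names here are hypothetical.

def predict_next(prev, curr):
    """Guess the next position by assuming the ball keeps its current velocity."""
    return curr + (curr - prev)


def surprise(trajectory):
    """Sum of squared prediction errors over a sequence of positions."""
    total = 0.0
    for t in range(2, len(trajectory)):
        predicted = predict_next(trajectory[t - 2], trajectory[t - 1])
        total += (trajectory[t] - predicted) ** 2
    return total


# A ball rolling steadily: one unit of distance per frame.
plausible = [float(x) for x in range(10)]

# The same ball, but it jumps three units ahead after the fifth frame.
implausible = plausible[:5] + [x + 3.0 for x in plausible[5:]]

print("plausible surprise:  ", surprise(plausible))
print("implausible surprise:", surprise(implausible))
```

In this sketch the steady trajectory produces no prediction error, while the jump produces a clearly higher score, which mirrors how wrong predictions on nonsensical videos can be read as a sign that the predictor expected physical behaviour.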

Piloto says the results show that an object-centric view of the world could give an AI a more generalised and adaptable set of abilities. “If you consider, for instance, all the different scenes that an apple might be in,” he says. “You don’t have to learn about an apple on a tree, versus an apple in your kitchen, versus an apple in the garbage. When you kind of isolate the apple as its own thing, you’re in a better position to generalise how it behaves in new systems, in new contexts. It provides learning efficiency.”

Mark Nixon at the University of Southampton, UK, says the work could lead to new avenues of AI research, and may even reveal clues about human vision and development. But he expressed concerns about reproducibility because the paper says that “our implementation of PLATO is not externally viable”.

“That means they’re using an architecture that other people probably can’t use,” he says. “In science, it’s good to be reproducible so that other people can get the same results and then take them further.”

Chen Feng at New York University says the findings could help to lower the computational requirements for training and running AI models.

“This is somewhat like teaching a kid what a car is by first teaching them what wheels and seats are,” he says. “The benefit of using object-centric representation, instead of raw visual inputs, makes AI learn intuitive physical concepts with better data efficiency.”

Journal reference: Nature Human Behaviour, DOI: 10.1038/s41562-022-01394-8
