International efforts to legally control the use of “killer robots” are faltering because of Russia’s invasion of Ukraine


13 July 2022

A Ukrainian soldier observes the area near a destroyed bridge. Image: DIMITAR DILKOFF/AFP via Getty Images

International attempts to regulate the use of autonomous weapons, sometimes called “killer robots”, are faltering and may be derailed if such weapons are used in Ukraine and seen to be effective.

No country is known to have used autonomous weapons yet. Their potential use is controversial because they would select and attack targets without human oversight. Arms control groups are campaigning for the creation of binding international agreements to cover their use, like the ones we have for chemical and biological weapons, before they are deployed. Progress is being stymied by world events, however.

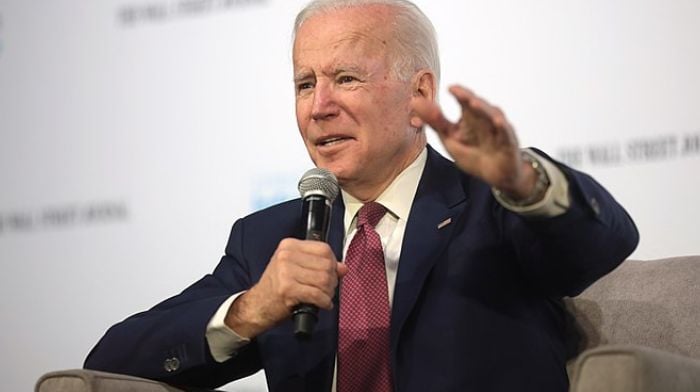

A United Nations Group of Governmental Experts is holding its final meeting on autonomous weapons from 25 to 29 July. The group has been looking at the issue since 2017 and, according to insiders, there is still no agreement. Russia opposes international legal controls and is now boycotting the discussions, for reasons relating to its invasion of Ukraine, making unanimous agreement impossible.

“The United Nations process operates under a consensus mechanism, so there is no chance for a blanket ban on autonomous weapons,” says Gregory Allen at the Center for Strategic and International Studies in Washington DC. Yet there could still be a way for progress, he says, through the widespread adoption of a code of conduct.

Such a code could be based on Directive 3000.09, the first national policy on autonomous weapons, which was introduced in the US in 2012. This is being revisited under a Pentagon requirement to revise rules every 10 years.

Allen says Directive 3000.09 is widely misunderstood as outlawing autonomous weapons in the US. It actually sets out criteria such weapons have to meet and a demanding approval process, with the aim of minimising risk to friendly forces or civilians. There are exceptions, such as anti-missile systems and landmines. Allen says the rules are so exacting – requiring sign-off from the US military’s highest-ranking officer, for example – that no system has yet been submitted for review.

If Russia uses autonomous weapons in Ukraine, attitudes may change. Russia has KUB loitering munitions that can be used in autonomous mode. These can wait in a specified area and attack when the device detects a target. Allen doubts they have been used autonomously, but says Russia sent similar Lancet loitering munitions to its troops in Ukraine in June. These can find and attack targets without oversight. There are no reports that either has been used in autonomous mode.

“I can guarantee, if Russia deploys these weapons, some people in the US government will ask ‘do we now, or will we later, need comparable capabilities for effective deterrence?’,” says Allen.

Mark Gubrud at the University of North Carolina at Chapel Hill also says that any reports of autonomous weapons being used in Ukraine will increase Western enthusiasm for such weapons, or resistance to calls for arms control.

Allen says Lancet munitions are unlikely to meet the criteria of Directive 3000.09, which requires an error rate of less than 1 in a million. This is easier for anti-aircraft missiles to achieve than for weapons like Lancet, which need to distinguish military trucks from many other vehicles, such as school buses.

The Switchblade 300 miniature aerial missile system. Image: Cpl. Alexis Moradian/US Marine Corps/Alamy Live News

Forthcoming weapons might meet the criteria. Drone-maker AeroVironment has demonstrated an automatic target-recognition system it says can identify 32 types of tank. This could be used to convert its operator-controlled Switchblade loitering munitions to autonomous operation. Switchblades are currently being supplied to Ukraine, and could be used to hit Russian artillery positions from long range. AeroVironment acknowledges that fielding such a weapon autonomously raises ethical issues.

Allen says the extensive use in Ukraine of radio-frequency jamming, which breaks contact between human operators and drones, will increase the interest in autonomous weapons, which don’t need a link to be maintained.

Gubrud doubts whether Michael Horowitz, the director of the Pentagon’s Emerging Capabilities Policy Office, who is leading the review of Directive 3000.09, will call for stricter rules.

Allen also says the review of the directive probably won’t change much, but may add details on issues like machine learning and when advances require a system to be reassessed.

The US Department of Defense didn’t respond to a request for comment before publication.

In the absence of binding international laws or codes of conduct, autonomous weapons will continue to develop. Conflicts such as the one in Ukraine will drive demand for new and better weapons, and it may be only a matter of time before killer robots advance onto the battlefield.
