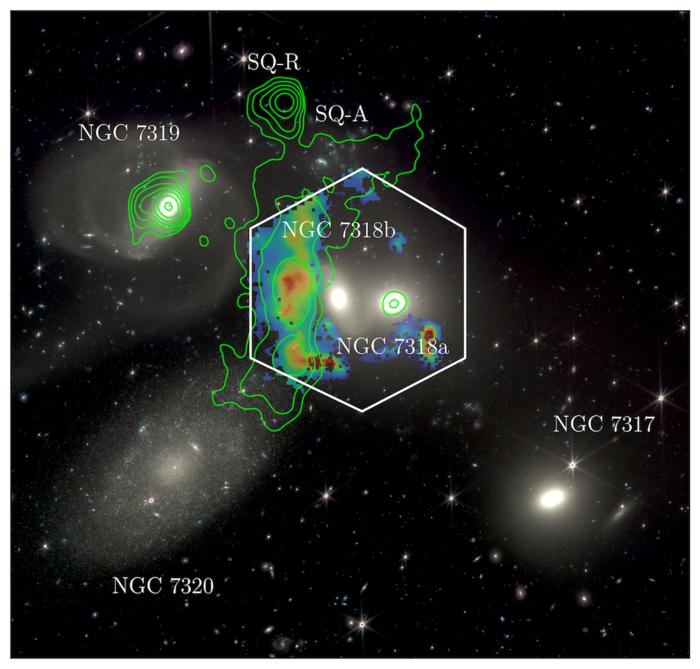

Researchers have developed the largest-ever dataset of biological images suitable for use by machine learning – and a new vision-based artificial intelligence tool to learn from it.

The findings in the new study significantly broaden the scope of what scientists can do using artificial intelligence to analyze images of plants, animals and fungi to answer new questions. A key strength, said Yu Su, co-author of the study and an assistant professor of computer science and engineering at Ohio State, is the model’s ability to learn fine-grained representations of images, or being able to tell the difference between similar-looking organisms within the same species and one species mimicking another’s appearance.

Whereas general computer vision models are useful for comparing common organisms like dogs and wolves, previous studies have revealed that they can’t take note of the subtle differences between two species of the same plant genus.

Because of its better grasp of nuance, said Su, the model in this paper is also uniquely qualified to make determinations on rare and unseen species.

“BioCLIP covers many orders of magnitude more species and taxa than what was previously publicly available for general vision models,” he said. “Even when it has not seen a certain species before, it can come to a reasonable conclusion about how if this organism looks similar to this, then it’s likely that.”

As AI continues to advance, the study concludes, machine learning models like this one could soon become important tools for unraveling biological mysteries that would otherwise take much longer to understand. And while this first iteration of BioCLIP relied heavily on images and information from citizen science platforms, Stevens said future models could be upgraded by including more images and data from scientific labs and museums. Because labs are able to collect richer textual descriptions of species that detail their morphological features and other subtle differences between closely related species, such resources will provide a bevy of important information for the AI model.

In addition, many scientific labs have information on the fossils of extinct species, which the team expects will also broaden the model’s usefulness.

“Taxonomies are always changing as we update names and new species, so one thing we’d like to do in the future is leverage existing work much more heavily on how to integrate them,” he said. “In AI, when you throw more data at a problem, you’re going to get better results, so I think there’s a bigger version we can continue to train into a larger, stronger model.”

The study was supported by the National Science Foundation and the Ohio Supercomputer Center. Other Ohio State co-authors include Jiaman Wu, Matthew J. Thompson, Elizabeth G. Campolongo, Chan Hee Song, David Edward Carlyn, Tanya Berger-Wolf and Wei-Lun Chao. Li Dong from Microsoft Research, Wasila M. Dahdul from the University of California, Irvine, and Charles Stewart from Rensselaer Polytechnic Institute also contributed.

The material in this press release comes from the originating research organization. Content may be edited for style and length.