Researchers experimenting with OpenAI’s text-to-image tool, DALL-E 2, noticed that it appears to be covertly adding words such as “black” and “female” to image prompts, seemingly in an effort to diversify its output.

Technology

22 July 2022

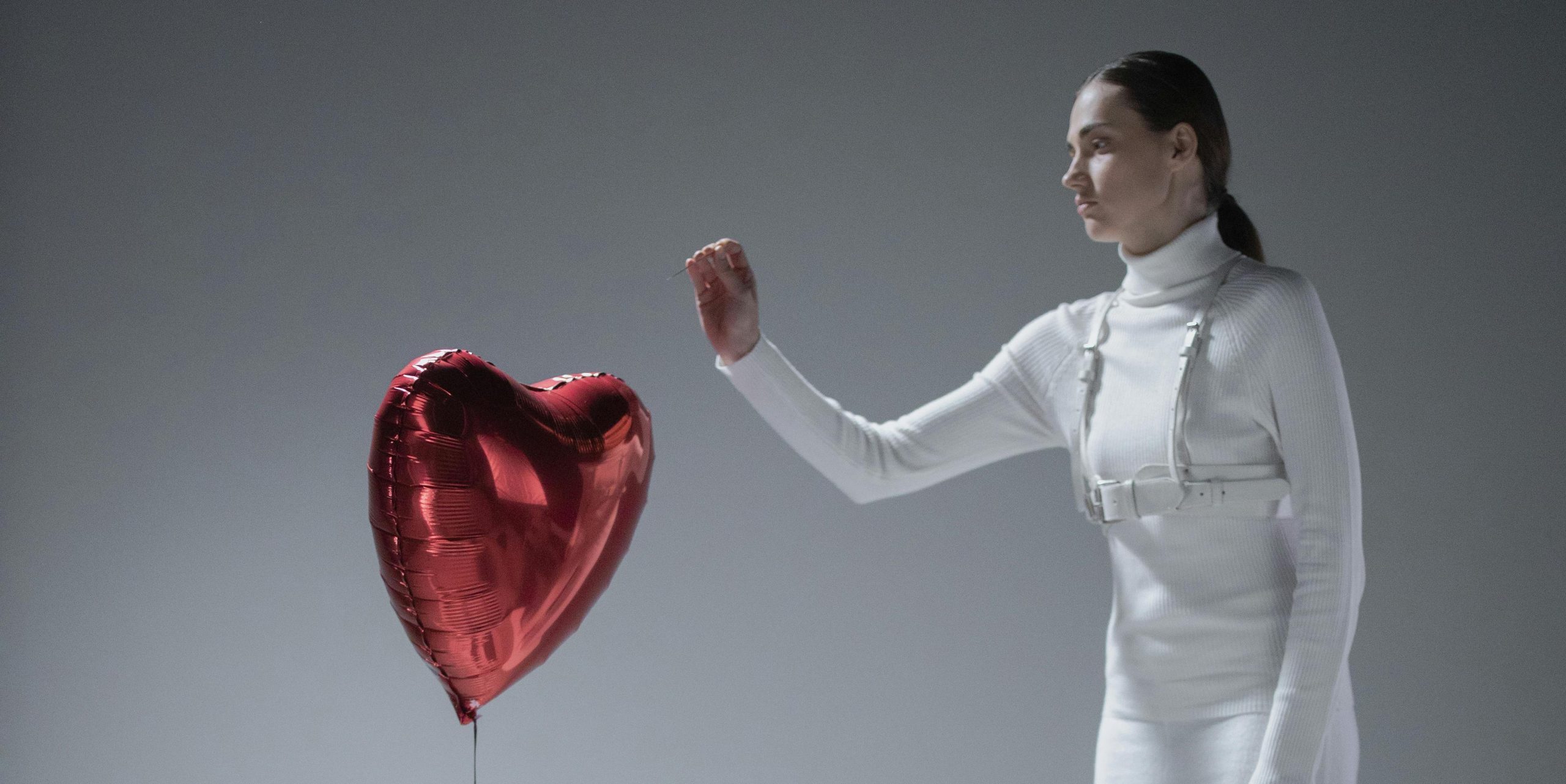

DALL-E 2 is a text-to-image generating artificial intelligence.

STEFANI REYNOLDS/AFP via Getty Images

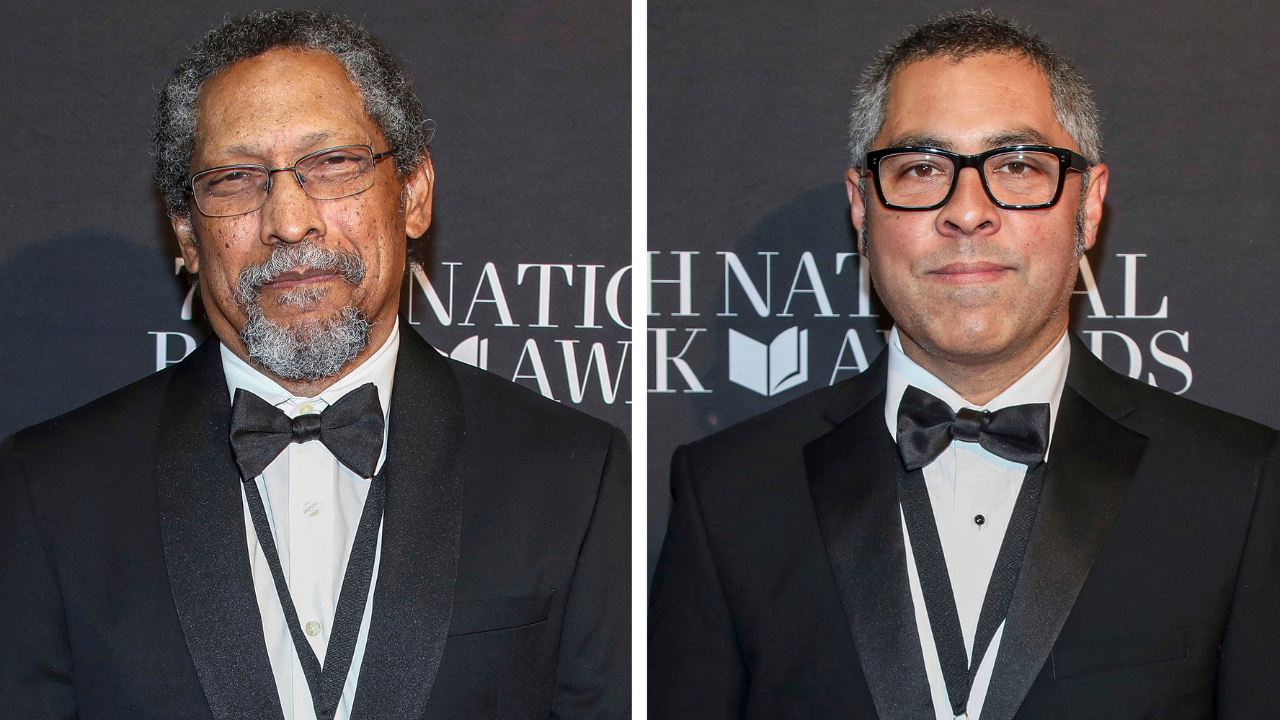

Artificial intelligence firm OpenAI seems to be covertly modifying requests to DALL-E 2, its advanced text-to-image AI, in an attempt to make the model appear less biased with respect to race and gender. Users have discovered that keywords such as “black” or “female” are being added, without their knowledge, to the prompts they give the AI.

It is well known that AIs can inherit human prejudices through training on biased data sets, often gathered by hoovering up data from the internet. For …