Research in the field of machine learning and AI, now a key technology in practically every industry and company, is far too voluminous for anyone to read it all. This column, Perceptron (previously Deep Science), aims to collect some of the most relevant recent discoveries and papers — particularly in, but not limited to, artificial intelligence — and explain why they matter.

This week in AI, researchers discovered a method that could allow adversaries to track the movements of remotely controlled robots even when the robots’ communications are encrypted end-to-end. The coauthors, who hail from the University of Strathclyde in Glasgow, said that their study shows that adopting the best cybersecurity practices isn’t enough to stop attacks on autonomous systems.

Remote control, or teleoperation, promises to let operators guide one or several robots from afar in a range of environments. Startups including Pollen Robotics, Beam, and Tortoise have demonstrated the usefulness of teleoperated robots in grocery stores, hospitals, and offices. Other companies develop remotely controlled robots for tasks like bomb disposal or surveying sites with heavy radiation.

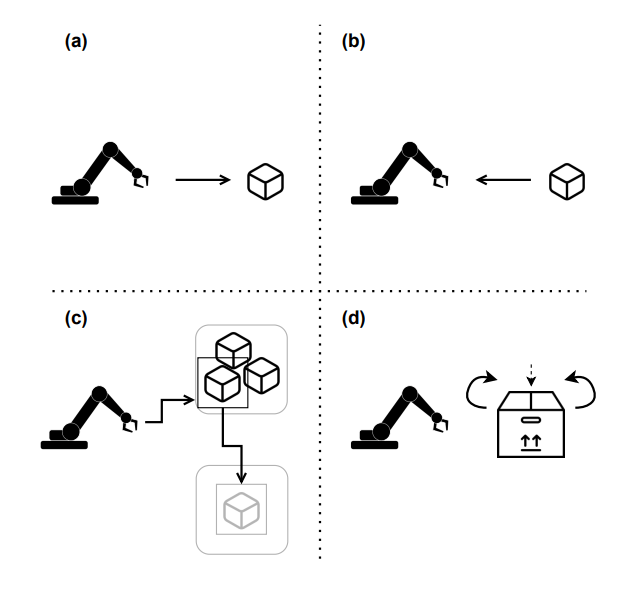

But the new research shows that teleoperation, even when supposedly “secure,” remains susceptible to surveillance. In a paper, the Strathclyde coauthors describe using a neural network to infer which operations a remotely controlled robot is carrying out. After collecting and analyzing samples of TLS-protected traffic between the robot and its controller, they found that the neural network could identify movements about 60% of the time and also reconstruct “warehousing workflows” (e.g., picking up packages) with “high accuracy.”
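To see why encryption alone doesn't hide everything, consider what an eavesdropper can still observe: TLS conceals the payload, but packet sizes and timing remain visible. The sketch below is not the Strathclyde authors' pipeline. It is a minimal illustration, with a hypothetical `window_features` helper and made-up packet values, of how such metadata could be condensed into numbers that a classifier (a neural network, say) might take as input.

```python
from statistics import mean, pstdev


def window_features(packets):
    """Summarize one time window of observed encrypted traffic.

    `packets` is a list of (timestamp_seconds, size_bytes) tuples.
    Both the function and the chosen features are illustrative
    assumptions, not taken from the paper.
    """
    if len(packets) < 2:
        raise ValueError("need at least two packets to compute timing gaps")

    times = [t for t, _ in packets]
    sizes = [s for _, s in packets]
    # Inter-arrival gaps between consecutive packets.
    gaps = [later - earlier for earlier, later in zip(times, times[1:])]

    return {
        "count": len(packets),
        "mean_size": mean(sizes),
        "std_size": pstdev(sizes),
        "mean_gap": mean(gaps),
        "std_gap": pstdev(gaps),
    }


# Hypothetical capture: three packets, half a second apart.
example = [(0.0, 100), (0.5, 200), (1.0, 300)]
features = window_features(example)
```

If different robot commands produce different bursts of traffic, feature vectors like this one would differ from movement to movement, which is the kind of signal a classifier can learn from without ever decrypting a byte.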

Image Credits: Shah et al.

Alarming in a less immediate way is a new study from researchers at Google and the University of Michigan that explored people’s relationships with AI-powered systems in countries with weak legislation and “nationwide optimism” for AI. The work surveyed India-based, “financially stressed” users of instant loan platforms that target borrowers with credit determined by risk-modeling AI. According to the coauthors, the users experienced feelings of indebtedness for the “boon” of instant loans and an obligation to accept harsh terms, overshare sensitive data, and pay high fees.

The researchers argue that the findings illustrate the need for greater “algorithmic accountability,” particularly where it concerns AI in financial services. “We argue that accountability is shaped by platform-user power relations, and urge caution to policymakers in adopting a purely technical approach to fostering algorithmic accountability,” they wrote. “Instead, we call for situated interventions that enhance agency of users, enable meaningful transparency, reconfigure designer-user relations, and prompt a critical reflection in practitioners towards wider accountability.”

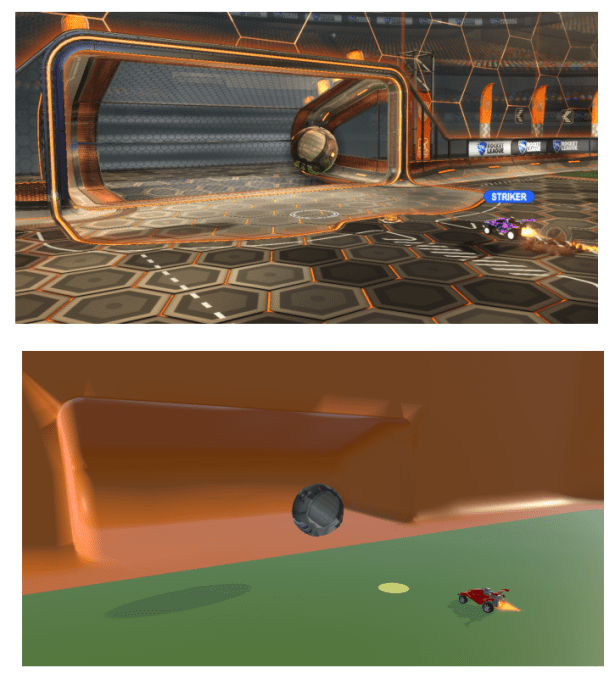

In less dour research, a team of scientists at TU Dortmund University, Rhine-Waal University, and LIACS Universiteit Leiden in the Netherlands developed an algorithm that they claim can “solve” the game Rocket League. Motivated to find a less computationally intensive way to create game-playing AI, the team leveraged what they call a “sim-to-sim” transfer technique, which trained the AI system to perform in-game tasks like goalkeeping and striking within a stripped-down, simplified version of Rocket League. (Rocket League basically resembles indoor soccer, except with cars instead of human players in teams of three.)

Image Credits: Pleines et al.

It wasn’t perfect, but the researchers’ Rocket League-playing system managed to save nearly all shots fired its way when goalkeeping. When on the offensive, the system scored on 75% of its shots, a respectable record.

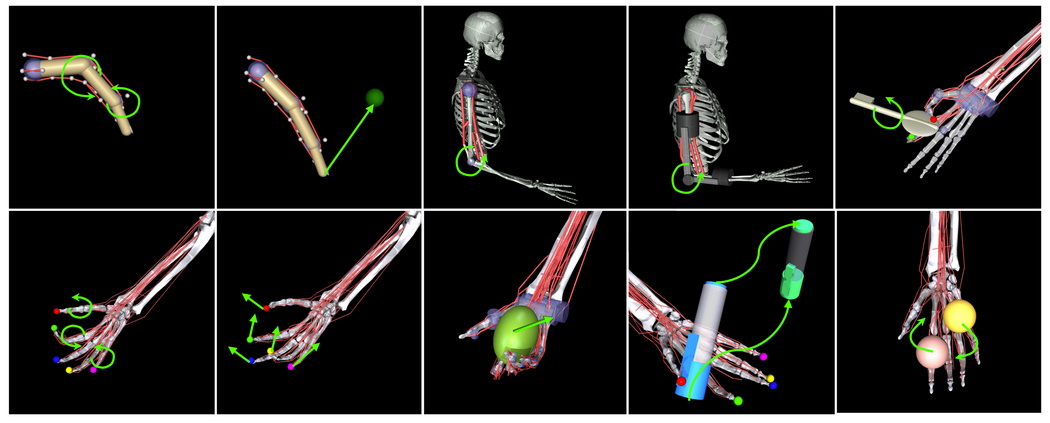

Simulators for human movements are also advancing at pace. Meta’s work on tracking and simulating human limbs has obvious applications in its AR and VR products, but it could also be used more broadly in robotics and embodied AI. Research that came out this week got a tip of the cap from none other than Mark Zuckerberg.

Simulated skeleton and muscle groups in MyoSuite.

MyoSuite simulates muscles and skeletons in 3D as they interact with objects and themselves — this is important for agents to learn how to properly hold and manipulate things without crushing or dropping them, and also in a virtual world provides realistic grips and interactions. It supposedly runs thousands of times faster on certain tasks, which lets simulated learning processes happen much quicker. “We’re going to open source these models so researchers can use them to advance the field further,” Zuck says. And they did!

Lots of these simulations are agent- or object-based, but this project from MIT looks at simulating an overall system of independent agents: self-driving cars. The idea is that if you have enough cars on the road, you can have them work together not just to avoid collisions, but to prevent idling and unnecessary stops at lights.

If you look closely, only the front cars ever really stop.

As you can see in the animation above, a set of autonomous vehicles communicating over vehicle-to-vehicle (V2V) protocols can keep all but the very front cars from stopping at all: each car progressively slows down behind the one ahead, but not so much that it actually comes to a halt. This sort of hypermiling behavior may seem like it doesn’t save much gas or battery, but when you scale it up to thousands or millions of cars it does make a difference, and it might be a more comfortable ride, too. Good luck getting everyone to approach the intersection perfectly spaced like that, though.
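The arithmetic behind "slow down so you never stop" is simple enough to sketch. This is not the MIT team's method. It is a back-of-the-envelope illustration that assumes each car knows its distance to the stop line and how long until the light turns green. The function names, speed limits, and headway value are all hypothetical.

```python
def glide_speed(distance_m, seconds_until_green, v_max=13.9, v_min=2.0):
    """Speed in m/s that reaches the stop line just as the light turns green.

    v_max (about 50 km/h) and v_min are assumed limits. A car clamped
    to v_min is too close to the line to glide through and would
    still have to stop.
    """
    if seconds_until_green <= 0:
        return v_max  # light is already green: proceed at the limit
    ideal = distance_m / seconds_until_green
    return max(v_min, min(v_max, ideal))


def platoon_speeds(lead_distance_m, gap_m, n_cars, seconds_until_green,
                   headway_s=2.0):
    """Approach speeds for a line of cars spaced gap_m apart.

    Each following car aims to cross the line headway_s seconds
    after the car ahead of it (an assumed safety margin).
    """
    speeds = []
    for i in range(n_cars):
        distance = lead_distance_m + i * gap_m
        arrival = seconds_until_green + i * headway_s
        speeds.append(glide_speed(distance, arrival))
    return speeds


# Hypothetical scenario: lead car 100 m out, 20 m gaps, green in 20 s.
speeds = platoon_speeds(100, 20, 3, 20)
```

In this toy scenario, every car keeps rolling at a moderate speed instead of racing to the light and braking. A car only 10 m from the line with 20 seconds to wait hits the `v_min` floor, which is why the front cars are the ones that end up stopping.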

Switzerland is taking a good, long look at itself — using 3D scanning tech. The country is making a huge map using UAVs equipped with lidar and other tools, but there’s a catch: the movement of the drone (deliberate and accidental) introduces error into the point map that needs to be manually corrected. Not a problem if you’re just scanning a single building, but an entire country?

Fortunately, a team out of EPFL is integrating an ML model directly into the lidar capture stack that can determine when an object has been scanned multiple times from different angles and use that info to line up the point map into a single cohesive mesh. This news article isn’t particularly illuminating, but the paper accompanying it goes into more detail. An example of the resulting map is visible in the video above.

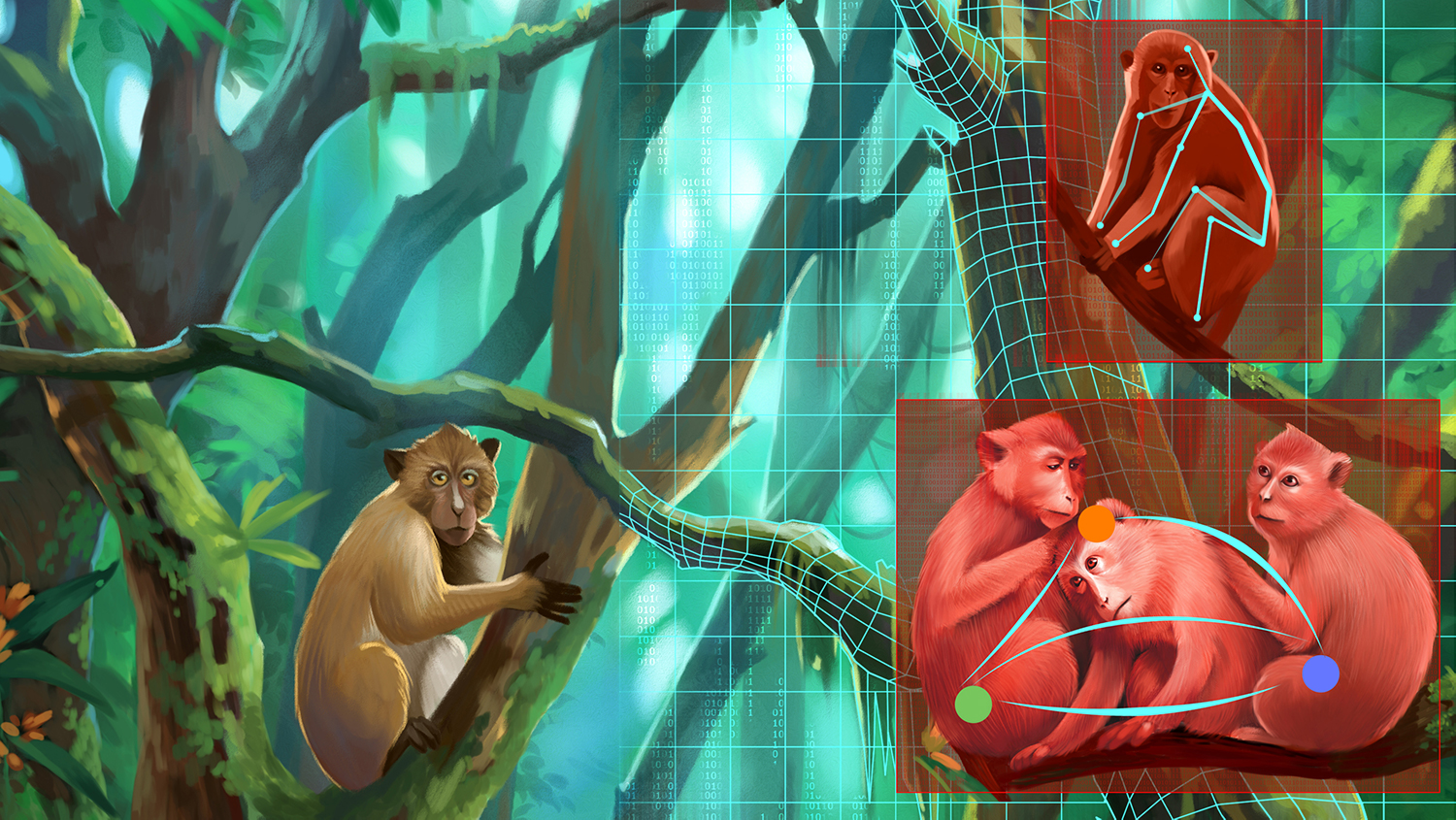

Lastly, in unexpected but highly pleasant AI news, a team from the University of Zurich has designed an algorithm for tracking animal behavior so zoologists don’t have to scrub through weeks of footage to find the two examples of courting dances. It’s a collaboration with the Zurich Zoo, which makes sense when you consider the following: “Our method can recognize even subtle or rare behavioral changes in research animals, such as signs of stress, anxiety or discomfort,” said lab head Mehmet Fatih Yanik.

So the tool could be used for learning and tracking behaviors in captivity, for the well-being of captive animals in zoos, and for other forms of animal studies as well. Researchers could use fewer subject animals and get more information in a shorter time, with less work by grad students poring over video files late into the night. Sounds like a win-win-win-win situation to me.

Image Credits: Ella Marushenko / ETH Zurich

Also, love the illustration.