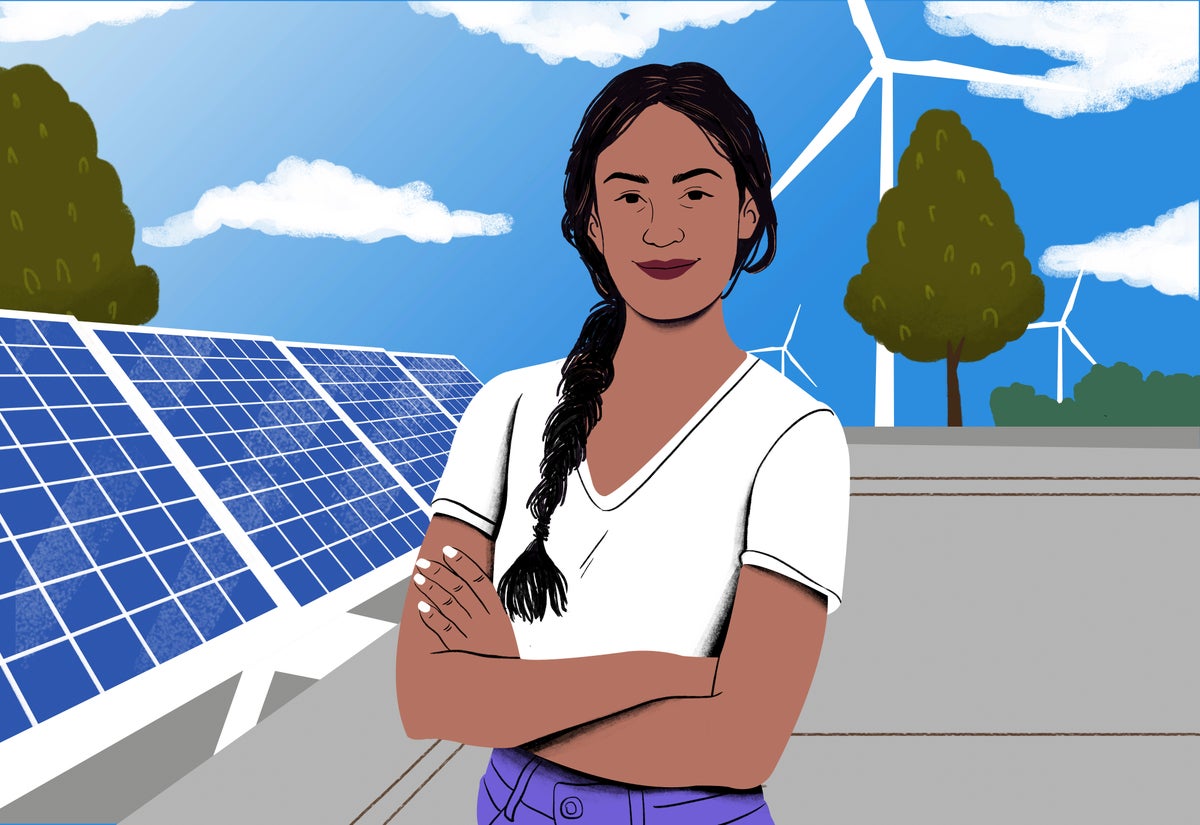

Imagen, from Google, is the latest example of an AI seemingly able to produce high-quality images from a text prompt, but such systems aren't quite ready to replace human illustrators.

26 May 2022

Examples of images created by Google’s Imagen AI. Credit: Imagen/Google

Tech firms are racing to create artificial intelligence algorithms that can produce high-quality images from text prompts, with the technology seeming to advance so quickly that some predict that human illustrators and stock photographers will soon be out of a job. In reality, limitations with these AI systems mean it will probably be a while before they can be used by the general public.

Text-to-image generators that use neural networks have made remarkable progress in recent years. The latest, Imagen from Google, comes hot on the heels of DALL-E 2, which was announced by OpenAI in April.

Both models use a neural network that is trained on a large number of examples to learn how images relate to text descriptions. When given a new text description, the neural network repeatedly generates images, altering them until they most closely match the text based on what it has learned.

While the images presented by both firms are impressive, researchers have questioned whether the results are being cherry-picked to show the systems in the best light. “You need to present your best results,” says Hossein Malekmohamadi at De Montfort University in the UK.

One problem in judging these AI creations is that both firms have declined to release public demos that would allow researchers and others to put them through their paces. Part of the reason for this is a fear that the AI could be used to create misleading images, or simply that it could generate harmful results.

The models rely on data sets scraped from large, unmoderated portions of the internet, such as the LAION-400M data set, which Google says is known to contain “pornographic imagery, racist slurs, and harmful social stereotypes”. The researchers behind Imagen say that because they can’t guarantee it won’t inherit some of this problematic content, they can’t release it to the public.

OpenAI claims to be improving DALL-E 2’s “safety system” by “refining the text filters and tuning the automated detection & response system for content policy violations”, while Google is seeking to address the challenges by developing a “vocabulary of potential harms”. Neither firm was able to speak to New Scientist before publication of this article.

Unless these problems can be solved, it seems unlikely that big research groups such as those at Google or OpenAI will offer their text-to-image systems for general use. Smaller teams could choose to release similar technology, but the sheer amount of computing power required to train these models on huge data sets tends to limit such work to the big players.

Despite this, the friendly competition between the big firms is likely to mean the technology continues to advance rapidly, as tools developed by one group can be incorporated into another’s future model. For example, diffusion models have shown promise in machine learning over the past year. In these, a neural network learns to reverse a process that gradually adds random noise to an image, which lets it build a clean image step by step, starting from pure noise. Both DALL-E 2 and Imagen rely on diffusion models, after the technique proved effective in less-powerful models, such as OpenAI’s Glide image generator.
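To make the "adding random noise" half of a diffusion model concrete, here is a minimal sketch of the forward noising process. It assumes the Gaussian-noise formulation and linear noise schedule common in the research literature; neither Google nor OpenAI's exact schedules are described in this article, and the function names and toy four-pixel "image" are hypothetical. A trained network (not shown) would learn to predict the added noise from the noisy image, and generation runs that process in reverse, starting from pure noise.

```python
import math
import random

def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances growing linearly (an assumed, commonly used schedule)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]

def cumulative_alphas(betas):
    """alpha_bar_t = product of (1 - beta_s) for s <= t: the share of original signal left."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars

def add_noise(image, t, alpha_bars, rng):
    """Jump straight to step t: x_t = sqrt(alpha_bar_t) * x_0 + sqrt(1 - alpha_bar_t) * noise."""
    a = alpha_bars[t]
    noise = [rng.gauss(0.0, 1.0) for _ in image]
    noisy = [math.sqrt(a) * x + math.sqrt(1.0 - a) * n for x, n in zip(image, noise)]
    # A denoising network would be trained to recover `noise` from (`noisy`, t).
    return noisy, noise

if __name__ == "__main__":
    rng = random.Random(0)
    image = [0.5, -0.2, 0.9, 0.0]  # hypothetical toy image, pixels scaled to [-1, 1]
    alpha_bars = cumulative_alphas(linear_beta_schedule(1000))
    for t in (0, 250, 500, 999):
        noisy, _ = add_noise(image, t, alpha_bars, rng)
        print(t, round(alpha_bars[t], 4), [round(v, 2) for v in noisy])
```

Running it shows the surviving signal fraction shrinking from almost 1 at the first step to almost 0 at the last, at which point the "image" is essentially random noise. This is the starting point from which a trained model works backwards.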

“For these types of algorithms, when you have a very strong competitor, it means that it helps you build your model better than those other ones,” says Malekmohamadi. “For example, Google has multiple teams working on the same type of [AI] platform.”
